Test Framework Modules

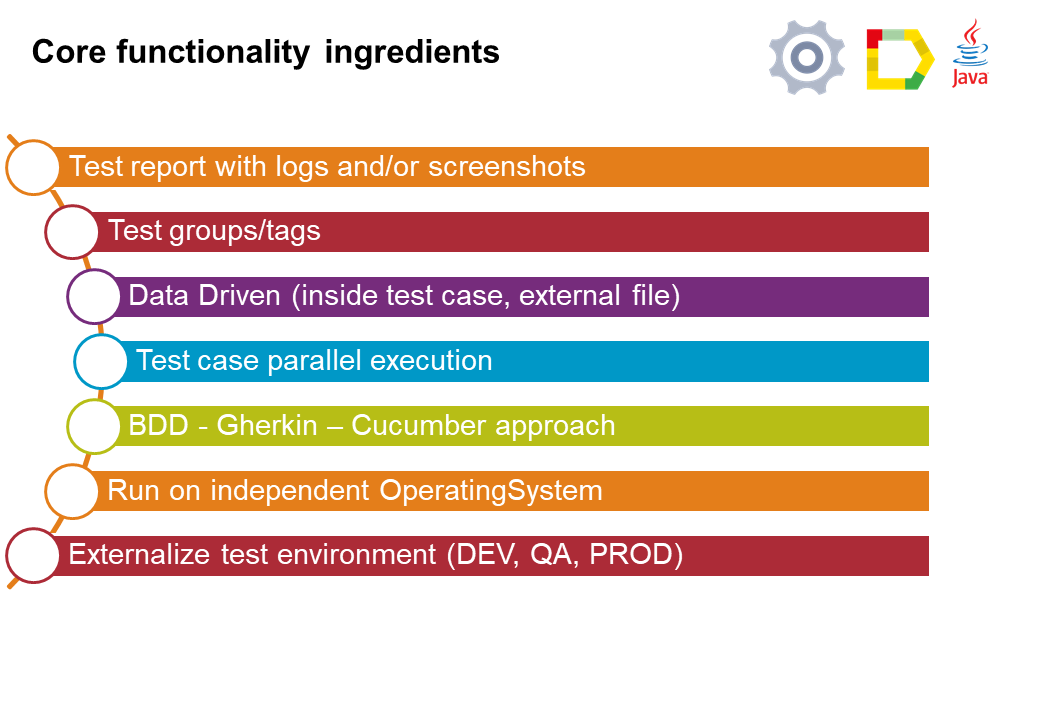

This section describes the main modules of MrChecker:

Core Test Module

What is Core Test Module

Core Test Module Functions

How to start?

Read: Framework Test Class

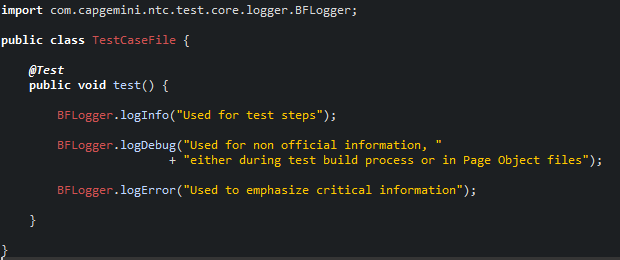

Allure Logger → BFLogger

In the Allure E2E Test Framework you can log any additional information that is crucial for:

- test steps
- test execution
- page object actions, and many more.

Where to find saved logs

Because tests are executed in parallel, the log output of each test is saved in a separate file.

The logs are saved in the following places:

- In the test folder C:\Allure_Test_Framework\allure-app-under-test\logs
- In every Allure Test report, where the logs are always embedded as an attachment to the corresponding test run.

How to use logger:

- Start typing BFLogger
- Then type . (dot)

Types of logger:

- BFLogger.logInfo("Your text") - used for test steps
- BFLogger.logDebug("Your text") - used for unofficial information, either during the test build process or in Page Object files
- BFLogger.logError("Your text") - used to emphasize critical information

Console output:

Allure Reports

Allure is a tool designed for test reports.

You can generate a report using one of the following commands:

Since mrchecker-core-module version 5.6.2.1:

mvn test allure:serve -Dgroups=TestsTag1

Prior to mrchecker-core-module version 5.6.2.1:

mvn test allure:serve -Dtest=TS_Tag1

The report is generated into a temporary folder and a web server with the results is started. You can additionally configure the server timeout with the system property allure.serve.timeout. The default value is "3600" (one hour).

Since mrchecker-core-module version 5.6.2.1:

mvn test allure:report -Dgroups=TestsTag1

Prior to mrchecker-core-module version 5.6.2.1:

mvn test allure:report -Dtest=TS_Tag1

The report will be generated at: target/site/allure-maven/index.html

NOTE: Please open the index.html file in Firefox. Chrome has some limitations in presenting dynamic content. If you want to open a report with a Chromium-based web browser, you need to launch it first with the --allow-file-access-from-files argument.

A report is created here: allure-app-under-test\target\site\allure-report\index.html

In our case, we’ll use the Allure Jenkins plugin. When integrating Allure in a Jenkins job configuration, we’ll have direct access to the build’s test report.

There are several ways to access the Allure Test Reports:

- Using the "Allure Report" button on the left navigation bar or in the center of the general job overview
- Using the "Allure Report" button on the left navigation bar or in the center of a specific build overview

Afterwards you’ll be greeted with either the general Allure Dashboard (showing the newest build) or the Allure Dashboard for a specific (older) build.

Allure dashboard

The Dashboard provides a graphical overview of how many test cases passed, failed or were broken.

- Passed means that the test case was executed successfully.
- Broken means that there were mistakes, usually inside the test method or test class. As tests are treated as code, broken code has to be expected, resulting in occasionally broken test results.
- Failed means that an assertion failed.
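The difference between the three statuses can be sketched in plain Java. This is an illustration only, not Allure's implementation: the class `StatusSketch` and the method `classify` are hypothetical names. It assumes, as described above, that a failed assertion (`AssertionError`) is reported as Failed and any other exception thrown by the test code as Broken.

```java
// Illustrative sketch only: hypothetical names, not Allure's real code.
final class StatusSketch {

    enum Status { PASSED, FAILED, BROKEN }

    // Runs a test body and maps its outcome to a report status.
    static Status classify(Runnable testBody) {
        try {
            testBody.run();
            return Status.PASSED;   // no exception: the test passed
        } catch (AssertionError e) {
            return Status.FAILED;   // an assertion did not hold
        } catch (RuntimeException e) {
            return Status.BROKEN;   // the test code itself is faulty
        }
    }

    public static void main(String[] args) {
        System.out.println(classify(() -> { }));
        System.out.println(classify(() -> { throw new AssertionError("expected 2"); }));
        System.out.println(classify(() -> { throw new IllegalStateException("bad setup"); }));
    }
}
```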

Defects

The defects tab lists all the defects that occurred, together with their descriptions. Clicking on a list item displays the test case which resulted in an error. Clicking on a test case allows the user to look at the test case steps, as well as log files or screenshots of the failure.

Graph

The graph page includes a pie chart of all tests, showing their result status (failed, passed, etc.). Another graph gives insight into the time elapsed during the tests. This information is very useful for finding and eliminating possible bottlenecks in test implementations.

Why join Test Cases in groups - Test Suites

Regression Suite:

Regression testing is a type of software testing which verifies that software which was previously developed and tested still performs the same way after it was changed or interfaced with other software.

- Business vital functionalities
- Full scope of test cases

Functional Suite:

- Smoke
- Business function A
- Business function B

Single Responsibility Unit:

- Single page
- Specific test case

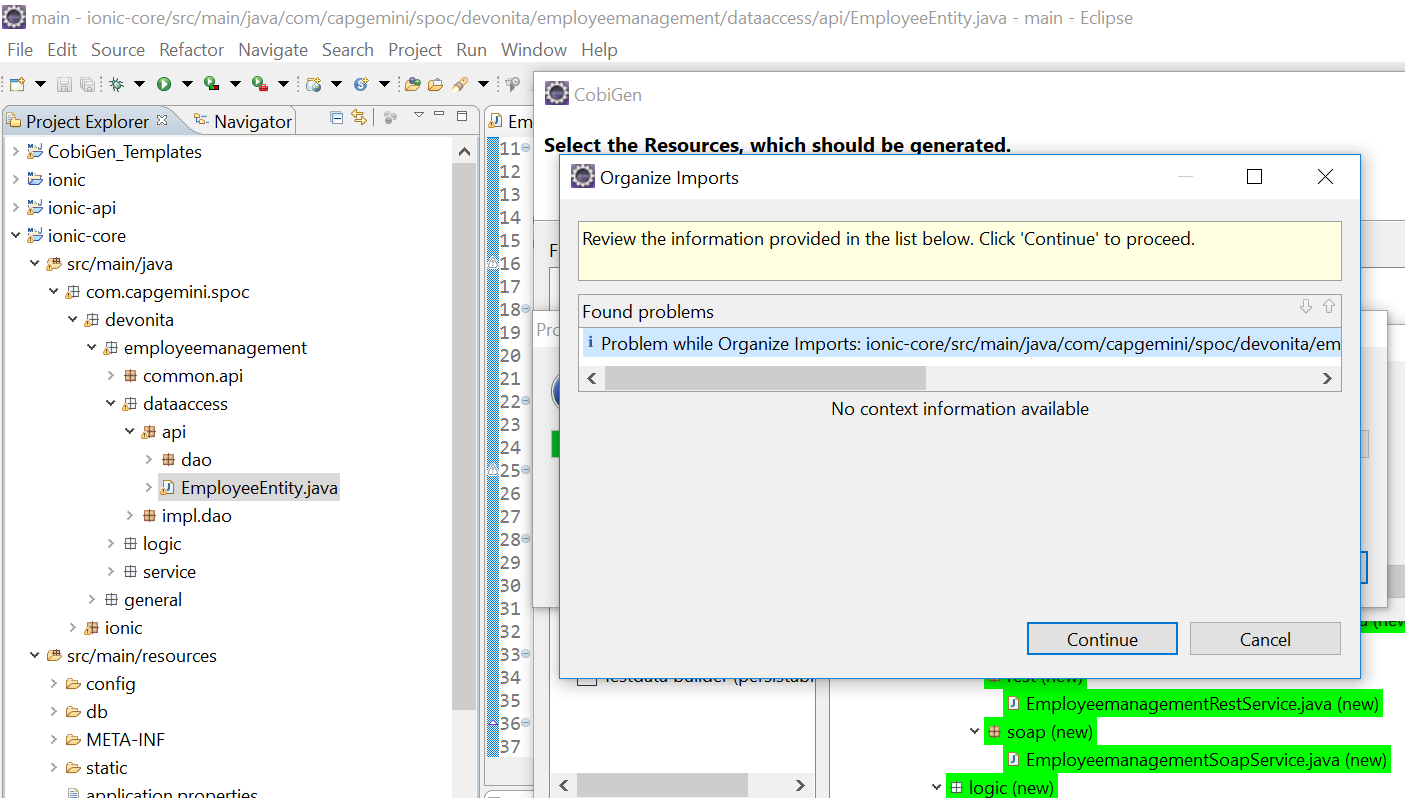

How to build a Test Suite based on tags

Structure of the Test Suite

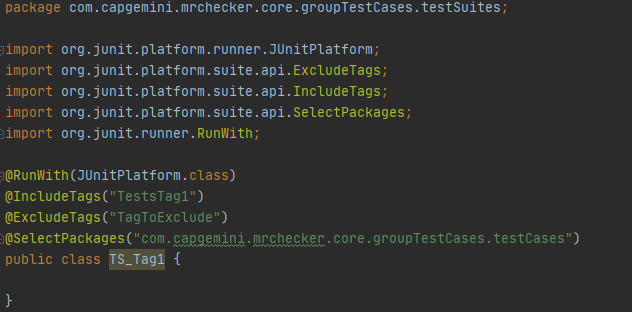

Since mrchecker-core-module version 5.6.2.1:

Where:

- @RunWith(JUnitPlatform.class) - use the JUnit 5 runner
- @IncludeTags({"TestsTag1"}) - search all test files with the tag "TestsTag1"
- @ExcludeTags({"TagToExclude"}) - exclude test files with the tag "TagToExclude"
- @SelectPackages("com.capgemini.mrchecker.core.groupTestCases.testCases") - search only test files in the "com.capgemini.mrchecker.core.groupTestCases.testCases" package
- public class TS_Tag1 - the name of the Test Suite is "TS_Tag1"

Most commonly used filters to build a Test Suite are ones using:

- @IncludeTags({ })
- @ExcludeTags({ })

Example:

- @IncludeTags({ "TestsTag1" }), @ExcludeTags({ }) → will execute all test cases with the tag "TestsTag1"
- @IncludeTags({ "TestsTag1" }), @ExcludeTags({ "SlowTest" }) → will execute all test cases with the tag "TestsTag1", except for the test cases with the tag "SlowTest"
- @IncludeTags({ }), @ExcludeTags({ "SlowTest" }) → will exclude test cases with the tag "SlowTest"
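How include and exclude filters combine can be sketched in plain Java. The sketch below only mimics the selection rule described above and is not JUnit's implementation; `TagFilterSketch`, `isSelected` and `tags` are hypothetical names. It assumes that an empty include filter means "no restriction" and that an exclude match always wins.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.Set;

// Illustrative sketch only: mimics include/exclude tag selection with hypothetical names.
final class TagFilterSketch {

    // Small helper to build a set of tag names.
    static Set<String> tags(String... names) {
        return new HashSet<>(Arrays.asList(names));
    }

    // A test is selected when it carries at least one included tag
    // (or no include filter is set) and none of the excluded tags.
    static boolean isSelected(Set<String> testTags, Set<String> include, Set<String> exclude) {
        boolean included = include.isEmpty() || !Collections.disjoint(testTags, include);
        boolean excluded = !Collections.disjoint(testTags, exclude);
        return included && !excluded;
    }

    public static void main(String[] args) {
        Set<String> fastTest = tags("TestsTag1");
        Set<String> slowTest = tags("TestsTag1", "SlowTest");
        System.out.println(isSelected(fastTest, tags("TestsTag1"), tags("SlowTest")));
        System.out.println(isSelected(slowTest, tags("TestsTag1"), tags("SlowTest")));
    }
}
```

On the Maven command line the same selection is expressed with -Dgroups and -DexcludeGroups.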

Prior to mrchecker-core-module version 5.6.2.1:

Where:

- @RunWith(WildcardPatternSuiteBF.class) - search for test files under /src/test/java
- @IncludeCategories({ TestsTag1.class }) - search for all test files with the tag "TestsTag1.class"
- @ExcludeCategories({ }) - exclude test files. In this example, there is no exclusion
- @SuiteClasses({ "**/*Test.class" }) - search only test files where the file name ends with "<anyChar/s>Test.class"
- public class TS_Tag1 - the name of the Test Suite is "TS_Tag1"

Most commonly used filters to build a Test Suite are ones using:

- @IncludeCategories({ })
- @ExcludeCategories({ })

Example:

- @IncludeCategories({ TestsTag1.class }), @ExcludeCategories({ }) → will execute all test cases with the tag "TestsTag1.class"
- @IncludeCategories({ TestsTag1.class }), @ExcludeCategories({ SlowTest.class }) → will execute all test cases with the tag "TestsTag1.class", except for the test cases with the tag "SlowTest.class"
- @IncludeCategories({ }), @ExcludeCategories({ SlowTest.class }) → will execute all test cases from /src/test/java, except for the test cases with the tag "SlowTest.class"

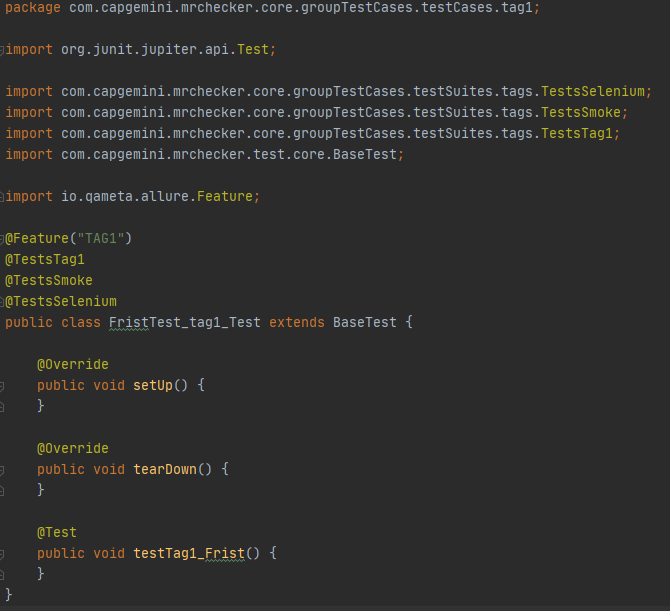

Structure of Test Case

Since mrchecker-core-module version 5.6.2.1:

Where:

- @TestsTag1, @TestsSmoke, @TestsSelenium - list of tags assigned to this test case: the "TestsTag1, TestsSmoke, TestSelenium" annotations
- public class FristTest_tag1_Test - the name of the test case is "FristTest_tag1_Test"

Prior to mrchecker-core-module version 5.6.2.1:

Where:

- @Category({ TestsTag1.class, TestsSmoke.class, TestSelenium.class }) - list of tags / categories assigned to this test case: "TestsTag1.class, TestsSmoke.class, TestSelenium.class"
- public class FristTest_tag1_Test - the name of the test case is "FristTest_tag1_Test"

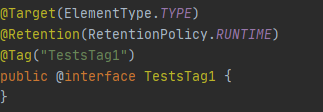

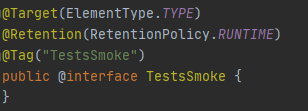

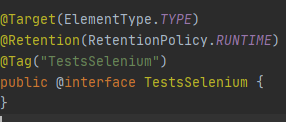

Structure of Tags / Categories

Since mrchecker-core-module version 5.6.2.1:

Tag name: TestsTag1 annotation

Tag name: TestsSmoke annotation

Tag name: TestSelenium annotation

Prior to mrchecker-core-module version 5.6.2.1:

Tag name: TestsTag1.class

Tag name: TestsSmoke.class

Tag name: TestSelenium.class

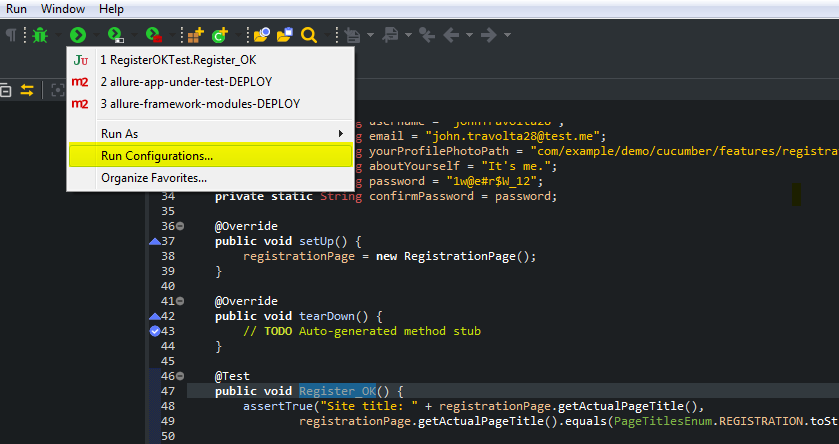

How to run Test Suite

To run a Test Suite you perform the same steps as you do to run a test case

Command line

Since mrchecker-core-module version 5.6.2.1:

JUnit5 disallows running suite classes from maven. Use -Dgroups=Tag1,Tag2 and -DexcludeGroups=Tag4,Tag5 to create test suites in maven.

mvn test site -Dgroups=TestsTag1

Prior to mrchecker-core-module version 5.6.2.1:

mvn test site -Dtest=TS_Tag1

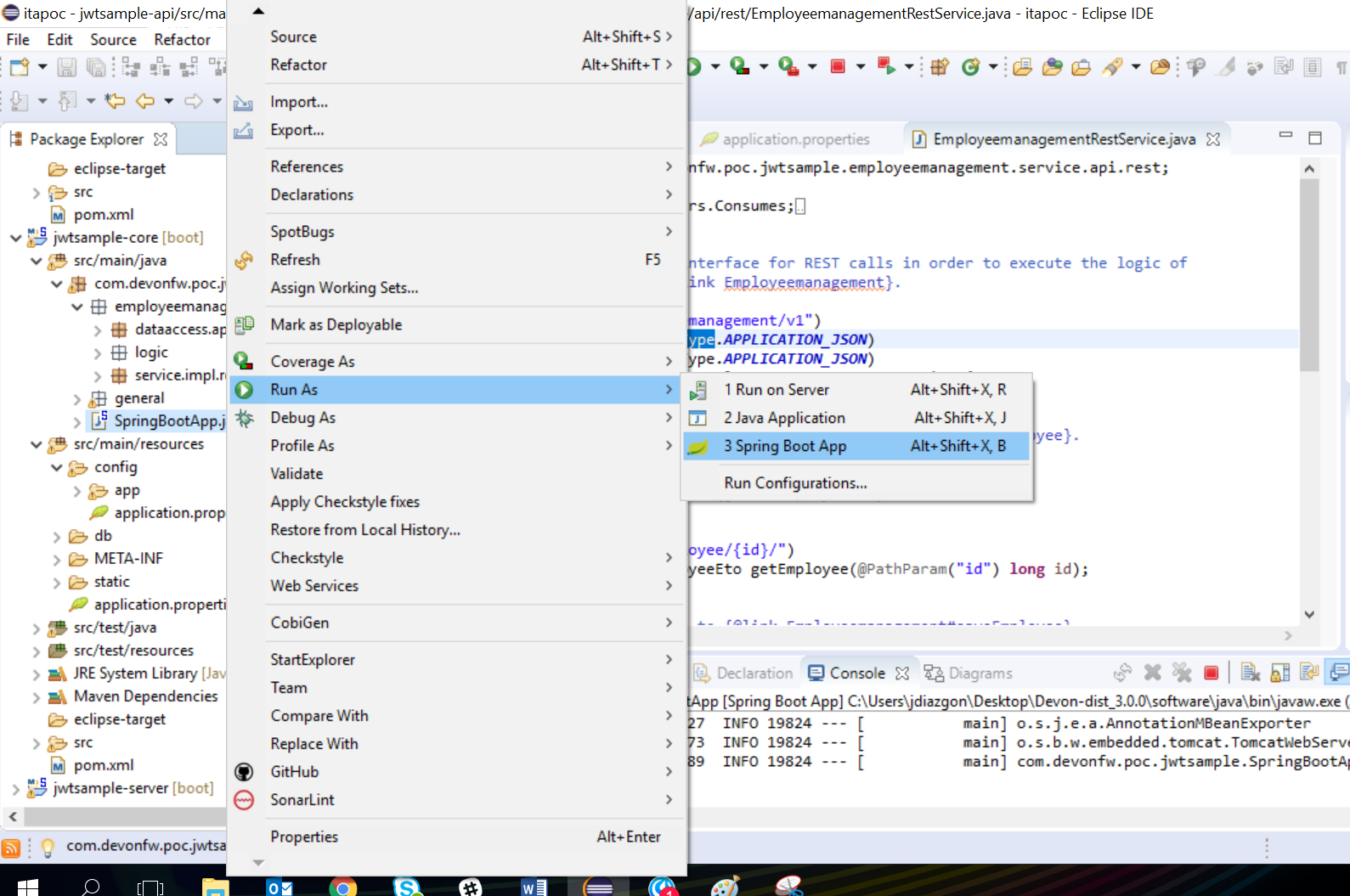

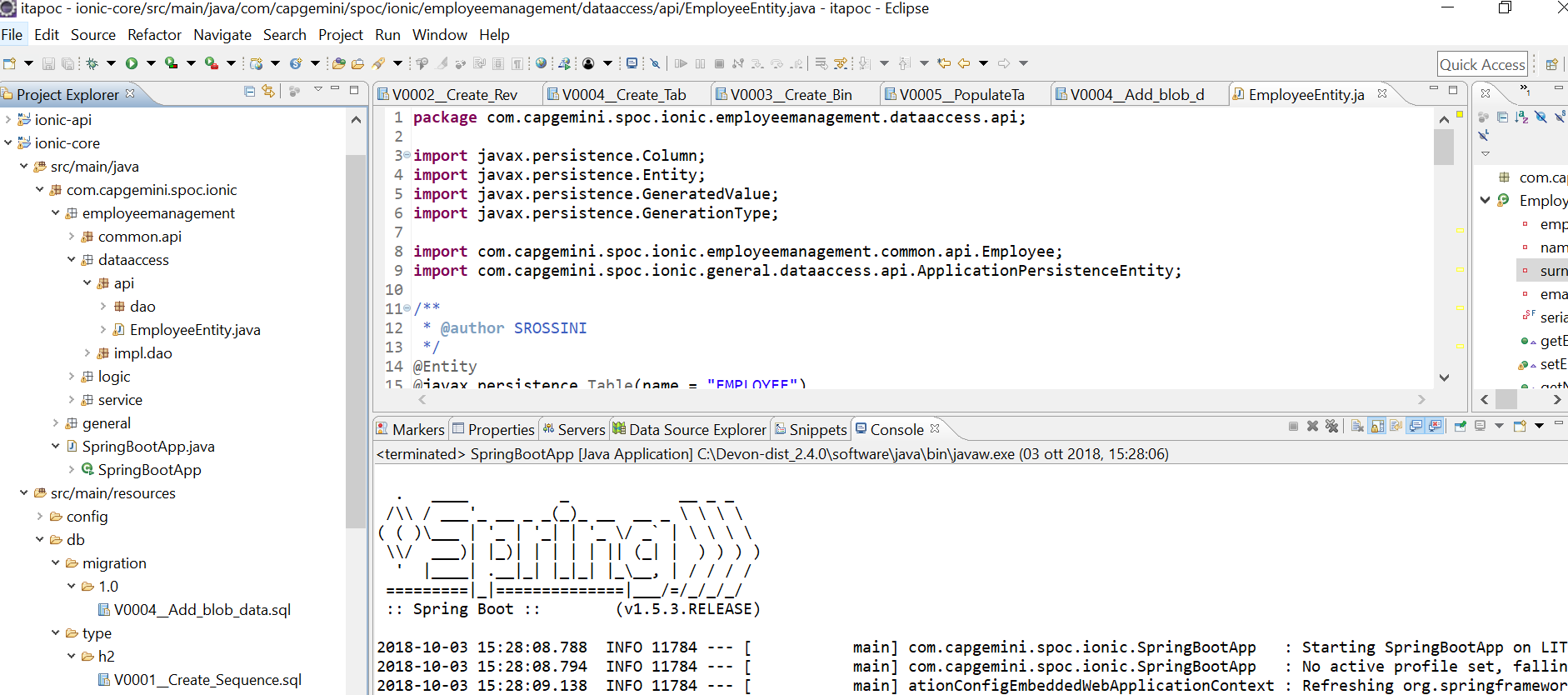

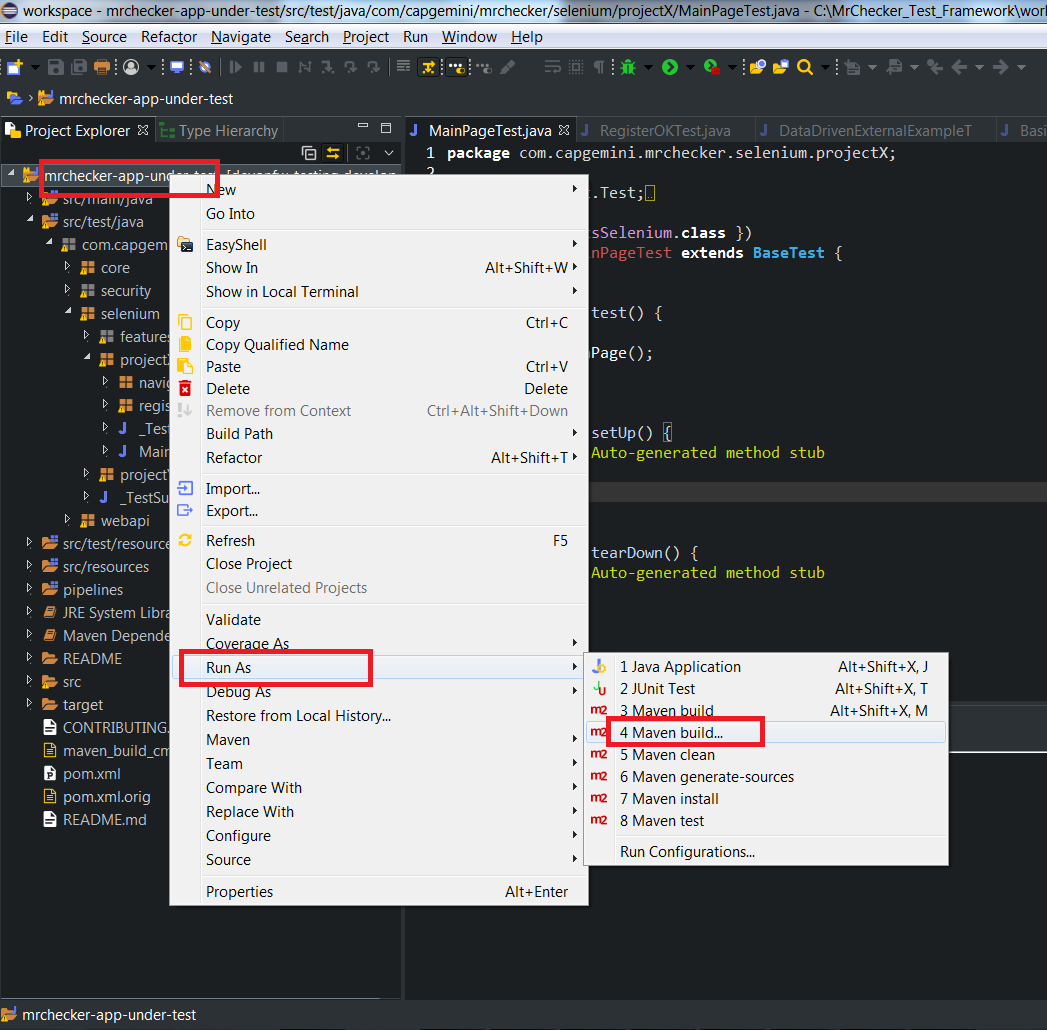

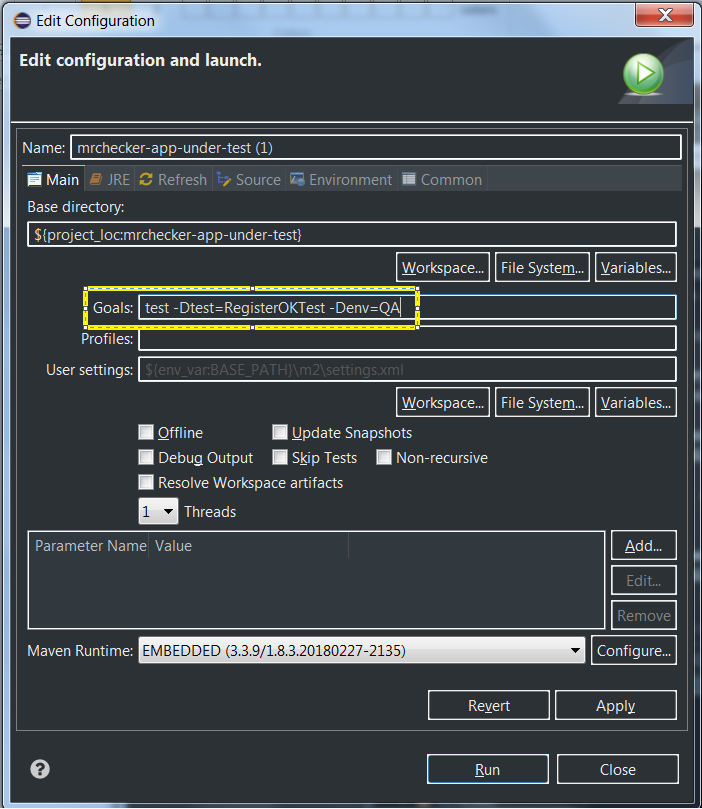

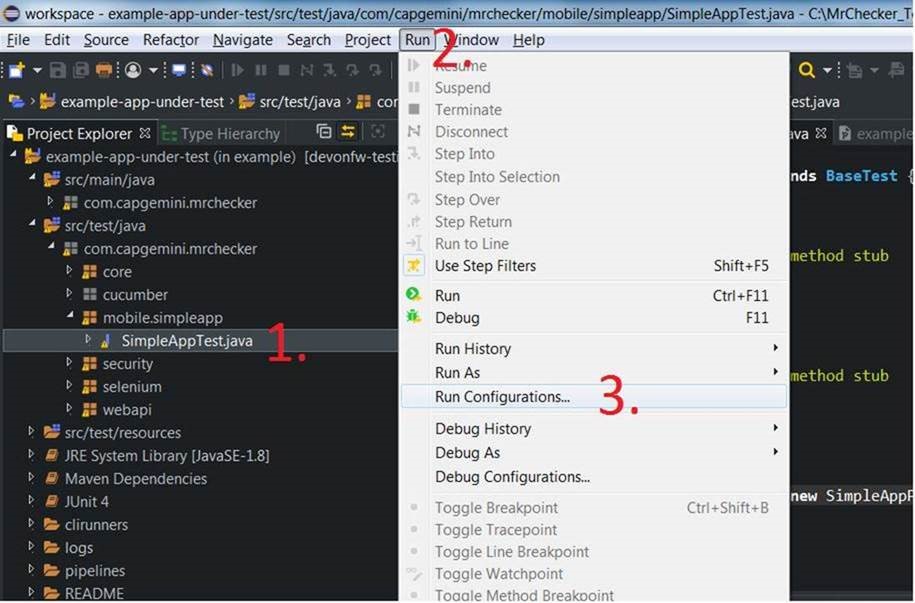

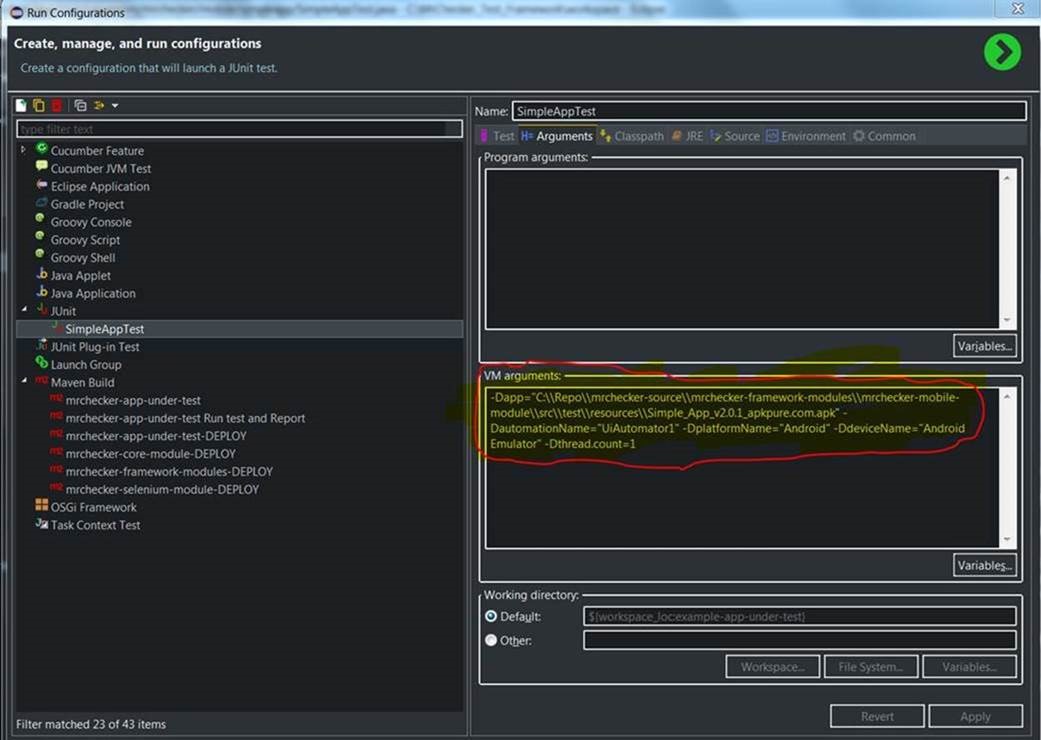

Eclipse

Data driven approach

Data driven approach - External data driven

External data driven - Data as external file injected in test case

Test case - Categorize functionality and severity

You can find more information about data driven here and here

There are a few ways to define parameters for tests.

Internal Data driven approach

Data as part of test case

The different means to pass in parameters are shown below.

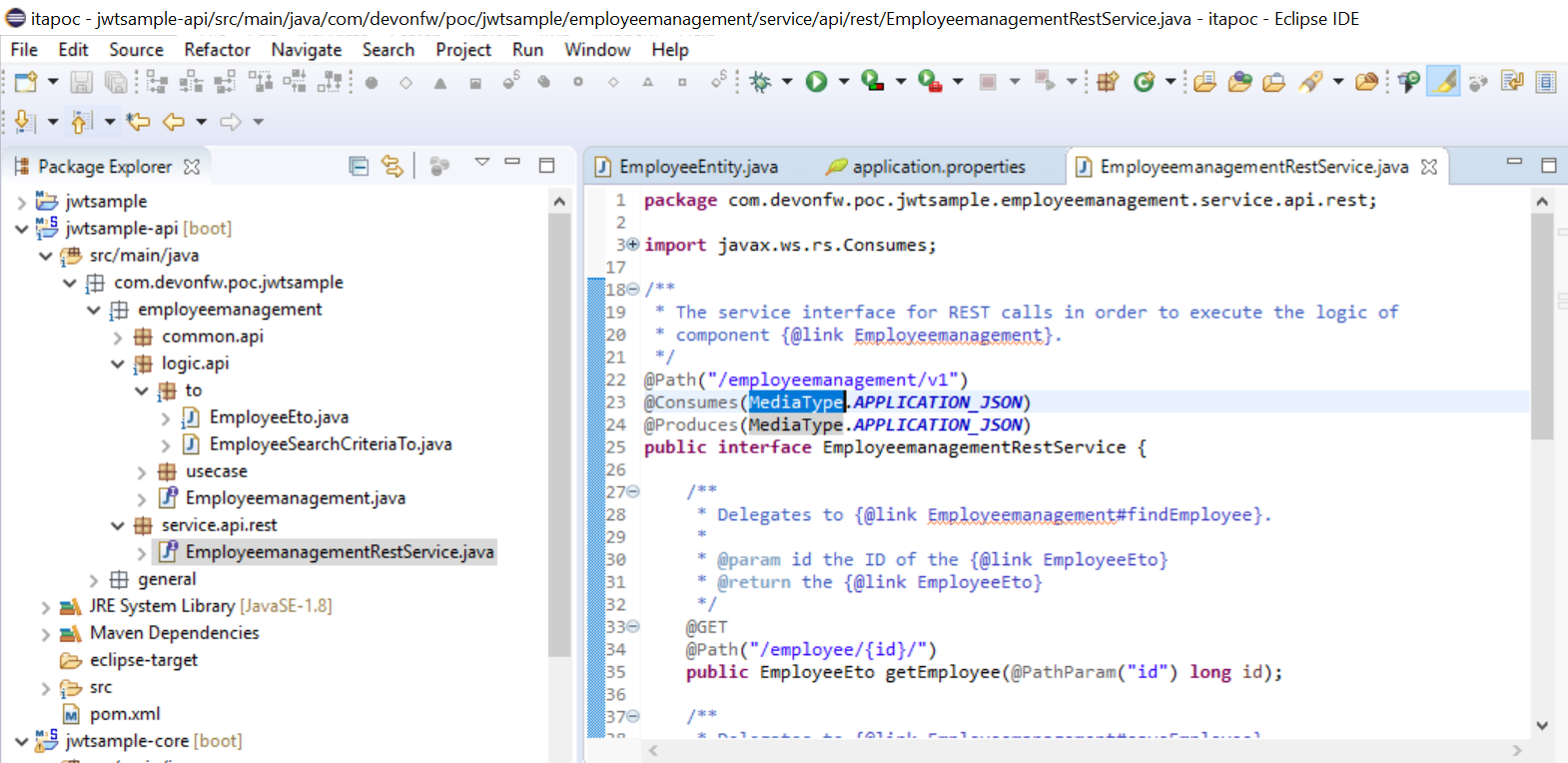

Since mrchecker-core-module version 5.6.2.1

Static methods are used to provide the parameters.

@ParameterizedTest

@MethodSource("argumentsStream")

OR

@ParameterizedTest

@MethodSource("arrayStream")

In the first case the arguments are directly mapped to the test method parameters. In the second case the array is passed as the argument.

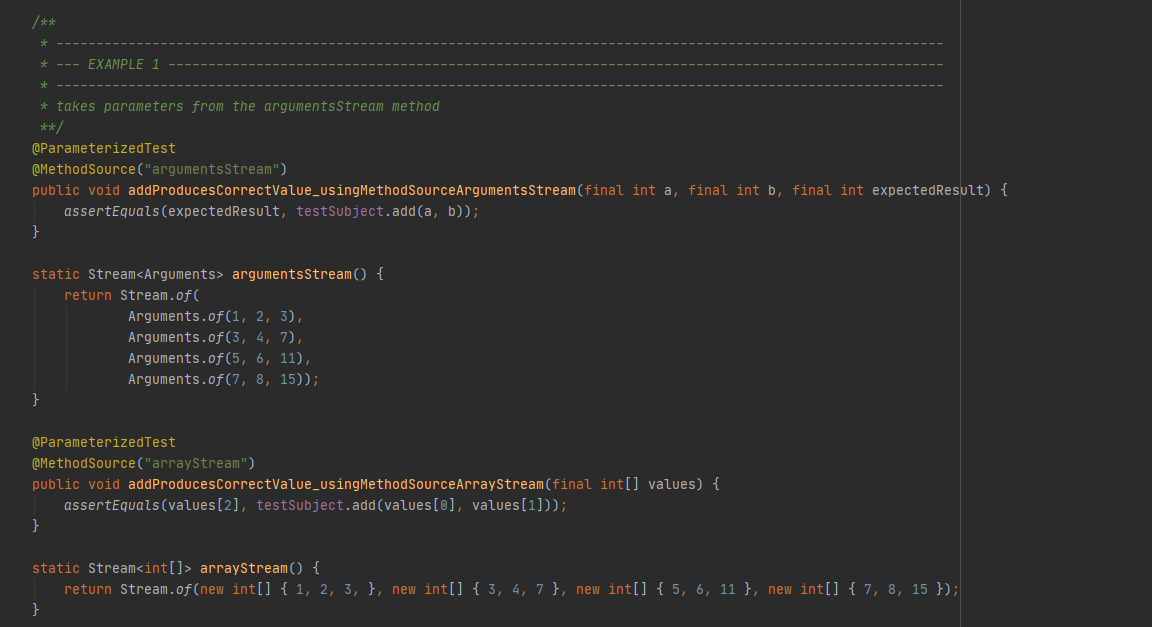

@ParameterizedTest

@MethodSource("com.capgemini.mrchecker.core.datadriven.MyContainsTestProvider#provideContainsTrueParameters")

Prior to mrchecker-core-module version 5.6.2.1

Parameters that are passed into tests using the @Parameters annotation must be of type Object[].

@Parameters({"1, 2, 3", "3, 4, 7", "5, 6, 11", "7, 8, 15"})

The parameters must be primitive objects such as integers, strings, or booleans. Each set of parameters is contained within a single string and will be parsed to their correct values as defined by the test method’s signature.

@Parameters(method = "addParameters")

A separate method can be defined and referred to for parameters. This method must return an Object[] and can contain normal objects.

@Parameters(source = MyContainsTestProvider.class)

A separate class can be used to define parameters for the test. This class must contain at least one static method that returns an Object[], and the method name must be prefixed with provide. The class can also contain multiple methods that provide parameters to the test, as long as they also meet these criteria.
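The naming convention for provider classes can be illustrated with a small reflection sketch in plain Java. It is not the code of the parameter library: `ProviderSketch` and `collect` are hypothetical names, and the contents of the nested `MyContainsTestProvider` class are invented for illustration. The sketch simply gathers the parameter sets of every static method that returns an `Object[]` and whose name starts with `provide`.

```java
import java.lang.reflect.Method;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

// Illustrative sketch only: shows the "provide" prefix convention with hypothetical names.
final class ProviderSketch {

    // Hypothetical provider class; its content is invented for this example.
    static class MyContainsTestProvider {
        public static Object[] provideContainsTrueParameters() {
            return new Object[] {
                new Object[] { "hello world", "hello" },
                new Object[] { "abc", "b" }
            };
        }

        // Ignored by collect(): the name does not start with "provide".
        public static Object[] helper() {
            return new Object[] { "not a parameter set" };
        }
    }

    // Collects the parameter sets of all static Object[] methods prefixed with "provide".
    static List<Object> collect(Class<?> provider) {
        List<Object> parameterSets = new ArrayList<>();
        try {
            for (Method method : provider.getDeclaredMethods()) {
                boolean matches = Modifier.isStatic(method.getModifiers())
                        && method.getName().startsWith("provide")
                        && method.getReturnType() == Object[].class;
                if (matches) {
                    for (Object set : (Object[]) method.invoke(null)) {
                        parameterSets.add(set);
                    }
                }
            }
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot read provider " + provider, e);
        }
        return parameterSets;
    }

    public static void main(String[] args) {
        System.out.println(collect(MyContainsTestProvider.class).size() + " parameter sets found");
    }
}
```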

External Data Driven

Data as external file injected in test case

Since mrchecker-core-module version 5.6.2.1

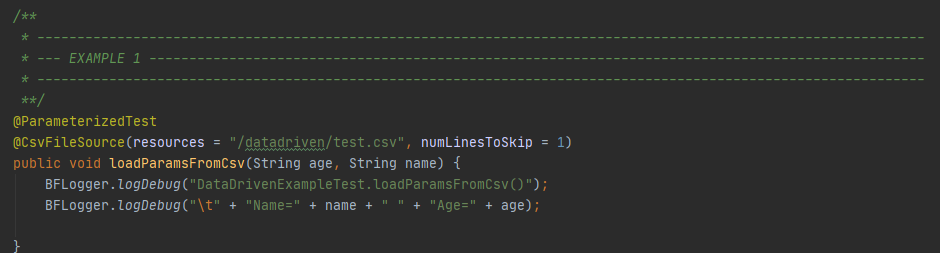

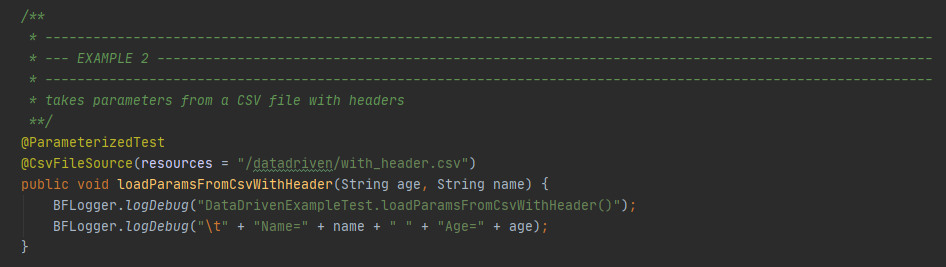

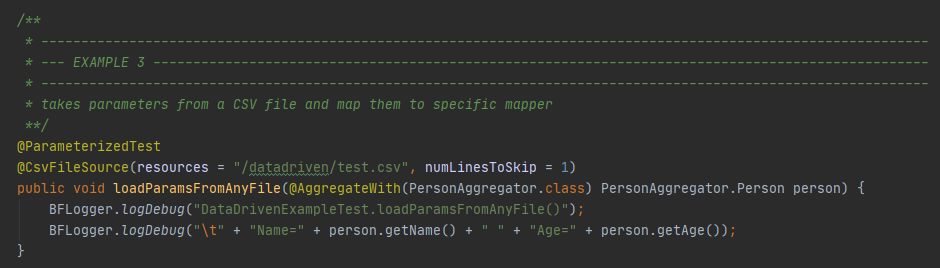

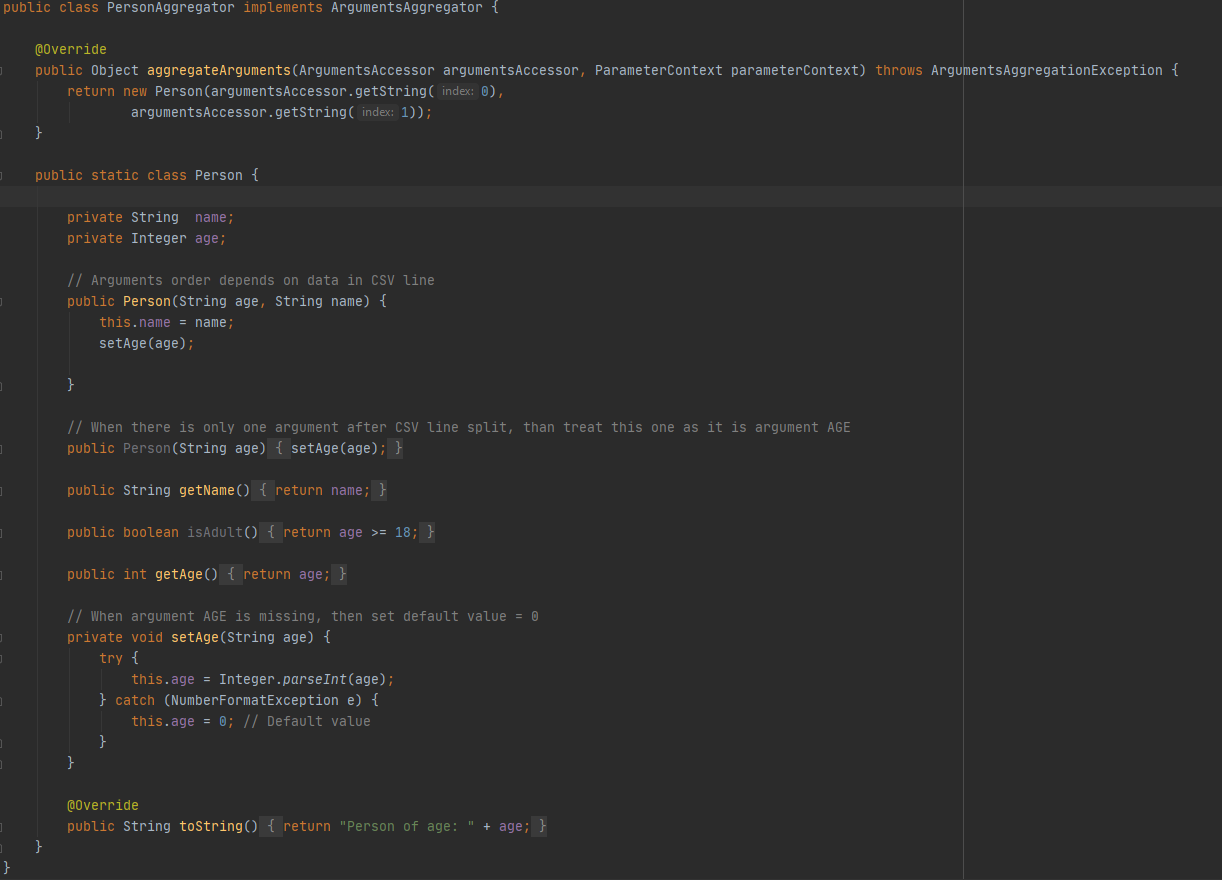

Tests use the @CsvFileSource annotation to inject CSV files.

@CsvFileSource(resources = "/datadriven/test.csv", numLinesToSkip = 1)

A CSV can also be used to contain the parameters for the tests. It is pretty simple to set up, as it’s just a comma-separated list.
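What a CSV-driven test does with its file can be sketched in plain Java. The sketch below is not the JUnit implementation; `CsvRowsSketch` and `rows` are hypothetical names, and the file content is an invented example with one header line. It shows the idea behind `numLinesToSkip = 1`: the header is skipped and every remaining line becomes one set of test arguments.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative sketch only: shows the idea behind numLinesToSkip with hypothetical names.
final class CsvRowsSketch {

    // Splits CSV lines into rows of trimmed values, skipping the first numLinesToSkip lines.
    // Naive on purpose: quoted fields containing commas are not handled.
    static List<String[]> rows(List<String> lines, int numLinesToSkip) {
        List<String[]> result = new ArrayList<>();
        for (String line : lines.subList(numLinesToSkip, lines.size())) {
            String[] cells = line.split(",");
            for (int i = 0; i < cells.length; i++) {
                cells[i] = cells[i].trim();
            }
            result.add(cells);
        }
        return result;
    }

    public static void main(String[] args) {
        // Invented file content: one header line followed by two data lines.
        List<String> csv = Arrays.asList("a, b, expectedSum", "1, 2, 3", "3, 4, 7");
        for (String[] row : rows(csv, 1)) {
            int a = Integer.parseInt(row[0]);
            int b = Integer.parseInt(row[1]);
            int expectedSum = Integer.parseInt(row[2]);
            System.out.println(a + " + " + b + " == " + expectedSum + " is " + (a + b == expectedSum));
        }
    }
}
```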

and CSV file structure

and Mapper implementation

Prior to mrchecker-core-module version 5.6.2.1

Tests use the @FileParameters annotation to inject CSV files.

@FileParameters("src/test/resources/datadriven/test.csv")

A CSV can also be used to contain the parameters for the tests. It is pretty simple to set up, as it’s just a comma-separated list.

and CSV file structure

and Mapper implementation

What is "Parallel test execution"?

Parallel test execution means that many "Test Classes" can run simultaneously.

A "Test Class" is a JUnit test class, so it can contain one or more test cases ("test case methods").

How many parallel test classes can run simultaneously?

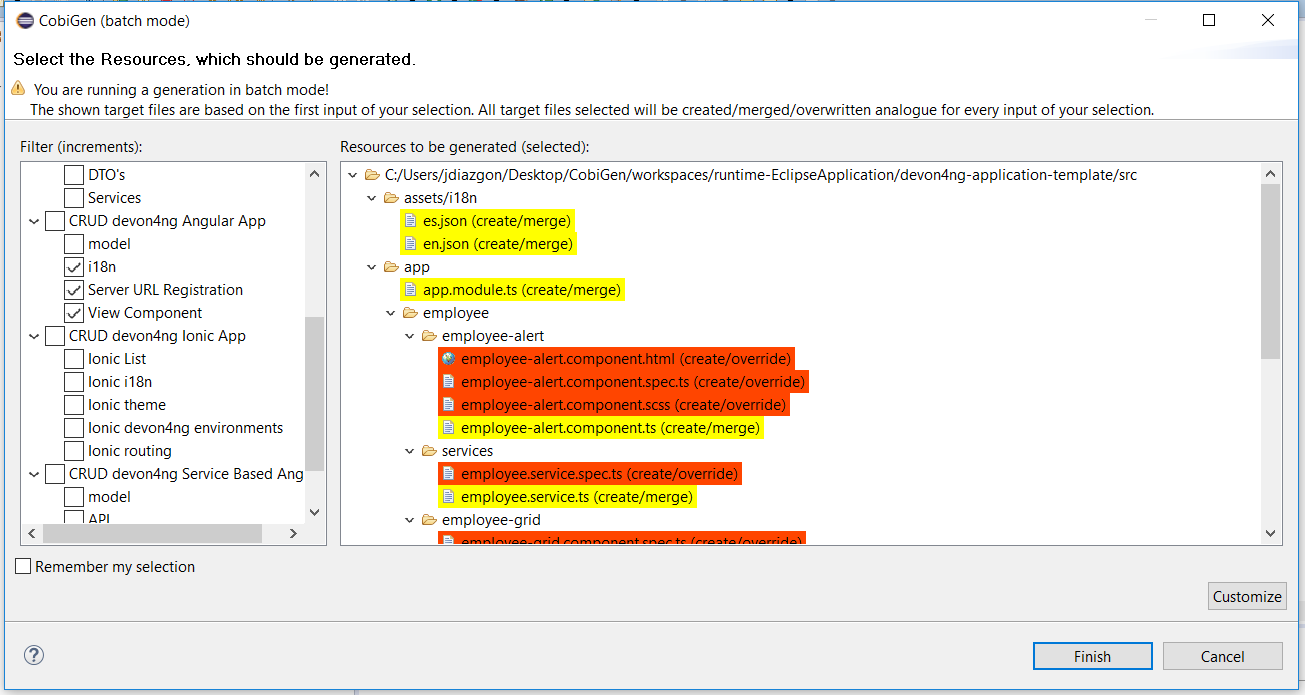

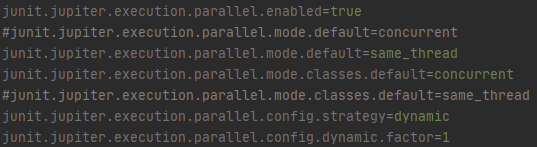

Since mrchecker-core-module version 5.6.2.1

JUnit5 supports parallelism natively. The feature is configured using a property file located at src\test\resources\junit-platform.properties.

With the default configuration, test classes run in parallel using a thread count equal to the number of your CPUs.

Visit the JUnit 5 site to learn more about parallel test execution.
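The execution model described above can be sketched in plain Java. This is only an illustration of "one thread per test class, thread count equal to the number of CPUs", not the JUnit engine; `ParallelClassesSketch` and `runAll` are hypothetical names, and each "test class" is modelled as a single task.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Illustrative sketch only: models test classes as tasks running on a fixed thread pool.
final class ParallelClassesSketch {

    // Runs every "test class" as one task; results are returned in submission order.
    static List<String> runAll(List<Callable<String>> testClasses, int threadCount) {
        ExecutorService pool = Executors.newFixedThreadPool(threadCount);
        try {
            List<String> results = new ArrayList<>();
            for (Future<String> finished : pool.invokeAll(testClasses)) {
                results.add(finished.get());
            }
            return results;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException("Test run did not complete", e);
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        // Same default as described above: one thread per available CPU.
        int threadCount = Runtime.getRuntime().availableProcessors();
        List<Callable<String>> testClasses = new ArrayList<>();
        testClasses.add(() -> "LoginPageTest ran on " + Thread.currentThread().getName());
        testClasses.add(() -> "RegistryPageTest ran on " + Thread.currentThread().getName());
        runAll(testClasses, threadCount).forEach(System.out::println);
    }
}
```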

Prior to mrchecker-core-module version 5.6.2.1

By default, the number of parallel test classes is set to 8.

It can be changed on demand from the command line:

mvn test site -Dtest=TS_Tag1 -Dthread.count=16

-Dthread.count=16 - increase number of parallel Test Class execution to 16.

Overview

Cucumber / Selenium

Business and IT don’t always understand each other. Very often misunderstandings between business and IT result in the costly failure of IT projects. With this in mind, Cucumber was developed as a tool to support human collaboration between business and IT.

Cucumber uses executable specifications to encourage a close collaboration. This helps teams to keep the business goal in mind at all times. With Cucumber you can merge specification and test documentation into one cohesive whole, allowing your team to maintain one single source of truth. Because these executable specifications are automatically tested by Cucumber, your single source of truth is always up-to-date.

Cucumber supports testers when designing test cases. To automate these test cases, several languages can be used. Cucumber also works well with Browser Automation tools such as Selenium Webdriver.

Selenium automates browsers and is used for automating web applications for testing purposes. Selenium offers testers and developers full access to the properties of objects and the underlying tests, via a scripting environment and integrated debugging options.

Selenium consists of many parts. If you want to create robust, browser-based regression automation suites and tests, Selenium Webdriver is most appropriate. With Selenium Webdriver you can also scale and distribute scripts across many environments.

Those familiar with Behavior Driven Development (BDD) recognize Cucumber as an excellent open source tool that supports this practice.

With Cucumber / Selenium you can automate at the UI level. Automation at the unit or API level can also be implemented using Cucumber. This means all tests, regardless of the level at which they are implemented, can be implemented in one tool.

Many teams seem to prefer UI level automation, despite the high cost of maintaining UI level tests compared to the cost of maintaining API or unit tests. To reduce the maintenance effort of UI testing, you can describe each UI level functional test and its automation at three levels: business rule, UI workflow and technical implementation.

When using Cucumber combined with Selenium, you can implement these three levels for better maintenance.

Executable specifications can and should be written before the functionality is implemented. By starting early, teams get the most return on investment from their test automation.

Cucumber and Selenium are both open source tools with a large community, online resources and mailing lists.

How to run Cucumber tests in MrChecker

- Run Cucumber tests and generate the Allure report. Please use this for Jenkins execution. The report is saved under ./target/site.

  mvn clean -P cucumber test site

- Run the tests and generate the report

  mvn clean -P cucumber test site allure:report

- Run Cucumber tests, generate the Allure report and start a standalone report server

  mvn clean -P cucumber test site allure:serve

Tooling

Cucumber supports over a dozen different software platforms. Every Cucumber implementation provides the same overall functionality, but they also have their own installation procedure and platform-specific functionality. See https://cucumber.io/docs for all Cucumber implementations and framework implementations.

Also, IDEs such as IntelliJ offer several plugins for Cucumber support.

Selenium has the support of some of the largest browser vendors who have taken (or are taking) steps to make Selenium a native part of their browser. It is also the core technology in countless other browser automation tools, APIs and frameworks.

Test automation in Cucumber starts with writing a feature file. A feature normally consists of several (test)scenarios and each scenario consists of several steps.

Feature: Refund item

Scenario: Jeff returns a faulty microwave

Given Jeff has bought a microwave for $100

And he has a receipt

When he returns the microwave

Then Jeff should be refunded $100

The above example shows a feature “Refund item” with one scenario “Jeff returns a faulty microwave”. The scenario consists of four steps, each starting with a keyword (Given, And, When, Then).

Next the steps are implemented. Assuming we use Java to implement the steps, the Java code will look something like this.

public class MyStepdefs {

    // '$' and '\d' are escaped for both the regular expression and the Java string
    @Given("Jeff has bought a microwave for \\$(\\d+)")
    public void Jeff_has_bought_a_microwave_for(int amount) {
        // implementation can be plain java
        // or selenium
        driver.findElement(By.name("test")).sendKeys("This is an example\n");
        driver.findElement(By.name("button")).click(); // etc
    }
}

Cucumber uses an annotation (here @Given) to match the step from the feature file with the function implementing the step in the Java class. The class and the function can be named as the developer sees fit. Selenium code can be used within the function to automate interaction with the browser.
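The matching itself can be illustrated without Cucumber. The sketch below uses only `java.util.regex` and is not Cucumber's implementation; `StepMatchSketch` and `extractAmount` are hypothetical names. It applies a regular expression equivalent to the one in the step annotation to a step text and captures the amount that would be passed to the step method.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Illustrative sketch only: shows how a step text is matched and its argument captured.
final class StepMatchSketch {

    // '$' is escaped because it is a regex metacharacter; (\d+) captures the amount.
    static final Pattern STEP = Pattern.compile("Jeff has bought a microwave for \\$(\\d+)");

    // Returns the captured amount, or -1 when the step text does not match.
    static int extractAmount(String stepText) {
        Matcher matcher = STEP.matcher(stepText);
        return matcher.matches() ? Integer.parseInt(matcher.group(1)) : -1;
    }

    public static void main(String[] args) {
        System.out.println(extractAmount("Jeff has bought a microwave for $100"));
        System.out.println(extractAmount("he has a receipt"));
    }
}
```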

There are several ways to run scenarios with Cucumber, for example the JUnit runner, a command line runner and several third party runners.

Cucumber executes your feature files. As shown in the example below, feature files in Gherkin are easy to read so they can be shared between IT and business. Data tables can be used to execute a scenario with different inputs.

Feature files are placed in a directory structure and together form a feature tree.

Tags can be used to group features based on all kinds of categories. Cucumber can include or exclude tests with certain tags when running the tests.

Cucumber can report results in several formats, using formatter plugins. The output from Cucumber can be used to present test results in Jenkins or Hudson, depending on the preference of the project. Note that this option is not supported by Shared Services.

How is Cucumber / Selenium used at Capgemini?

Cucumber and Selenium are chosen as one of Capgemini’s test automation industrial tools. We support the Java implementation of Cucumber and Selenium Webdriver. We can help with creating Cucumber, Selenium projects in Eclipse and IntelliJ.

In the context of industrialisation, Capgemini has developed a range of services to assist and support the projects in process and tools implementation.

In this context a team of experts assists projects using test automation.

The main services provided by the center of expertise are:

- Advise on the feasibility of automation.
- Support with installation.
- Coaching teams in the use of BDD.

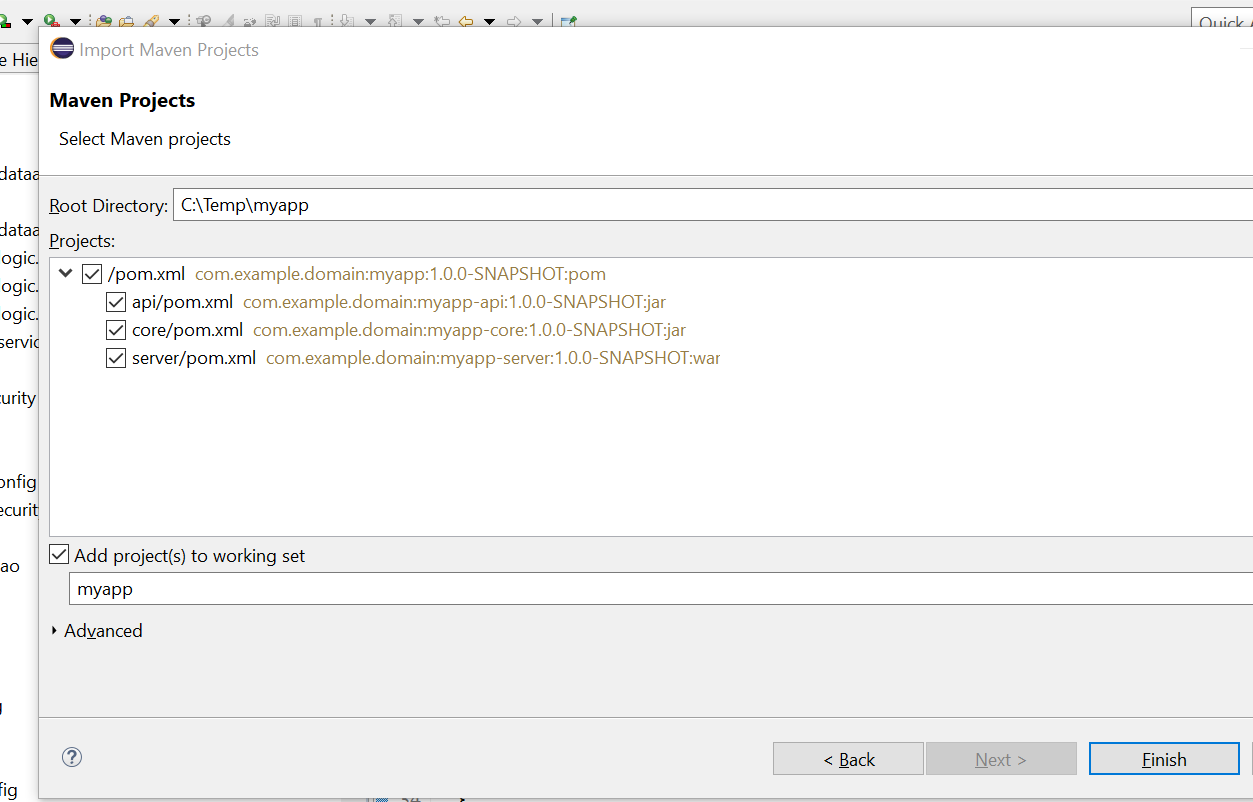

Run on independent Operation Systems

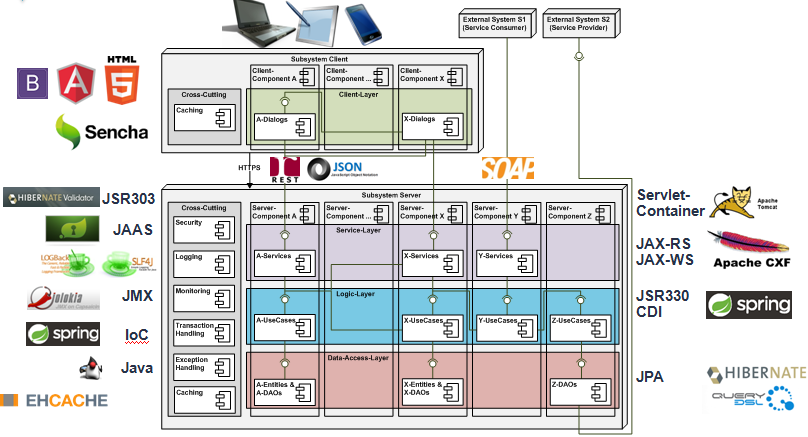

As E2E Allure test framework is build on top of:

-

Java 1.8

-

Maven 3.3

This guarantees portability to all operating systems.

E2E Allure test framework can run on OS:

-

Windows,

-

Linux and

-

Mac.

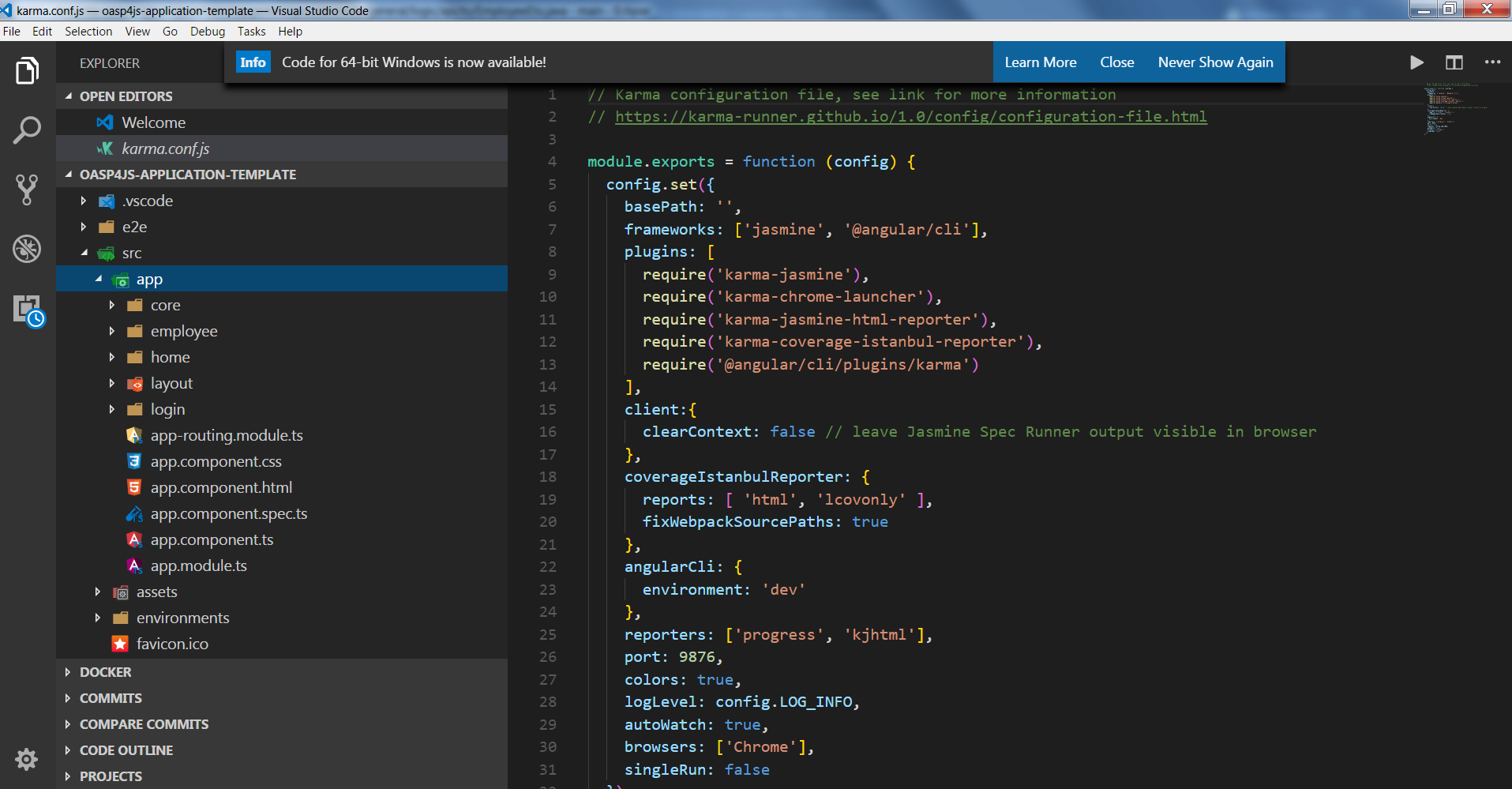

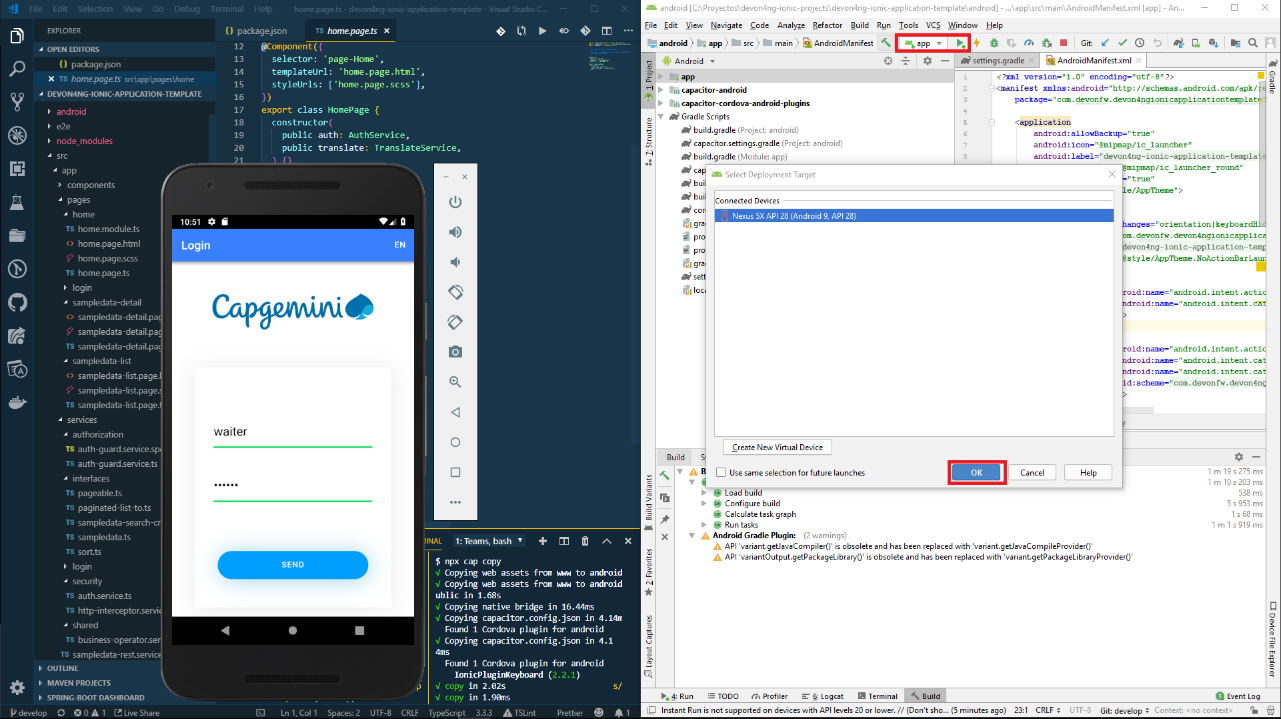

Test creation and maintenance in the E2E Allure test framework can be done with any type of IDE:

- Eclipse,
- IntelliJ,
- WebStorm,
- Visual Studio Code,
- many more that support Java + Maven.

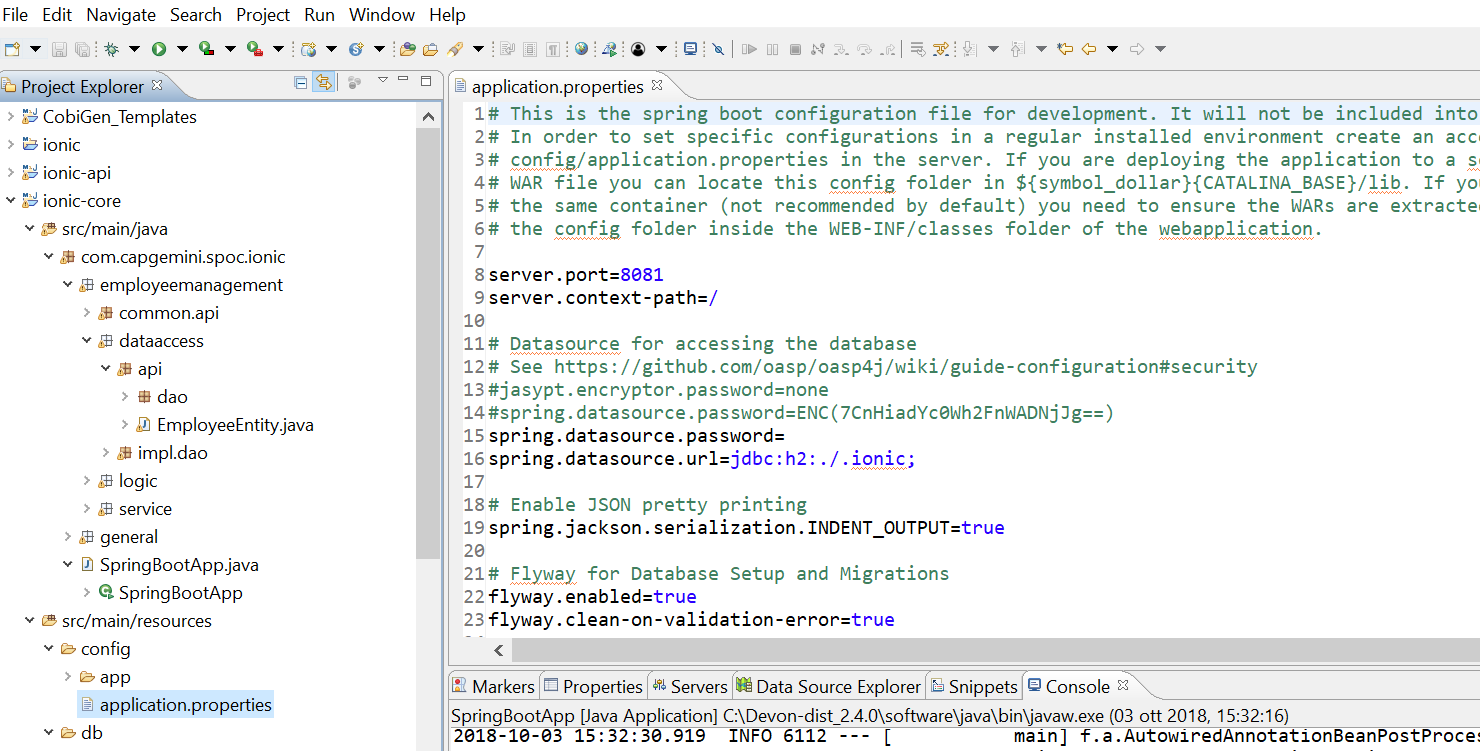

System under test environments

- Quality assurance (QA) is a way of preventing mistakes or defects in manufactured products and avoiding problems when delivering solutions or services to customers. ISO 9000 defines it as "part of quality management focused on providing confidence that quality requirements will be fulfilled".
- System integration testing (SIT) is a high-level software testing process in which testers verify that all related systems maintain data integrity and can operate in coordination with other systems in the same environment. The testing process ensures that all sub-components are integrated successfully to provide expected results.
- Development (Dev) testing is performed by the software developer or engineer during the construction phase of the software development life-cycle. Rather than replacing traditional QA, it augments it. Development testing aims to eliminate construction errors before code is promoted to QA; this strategy is intended to increase the quality of the resulting software as well as the efficiency of the overall development and QA process.
- Prod: if the customer accepts the product, it is deployed to a production environment, making it available to all users of the system.

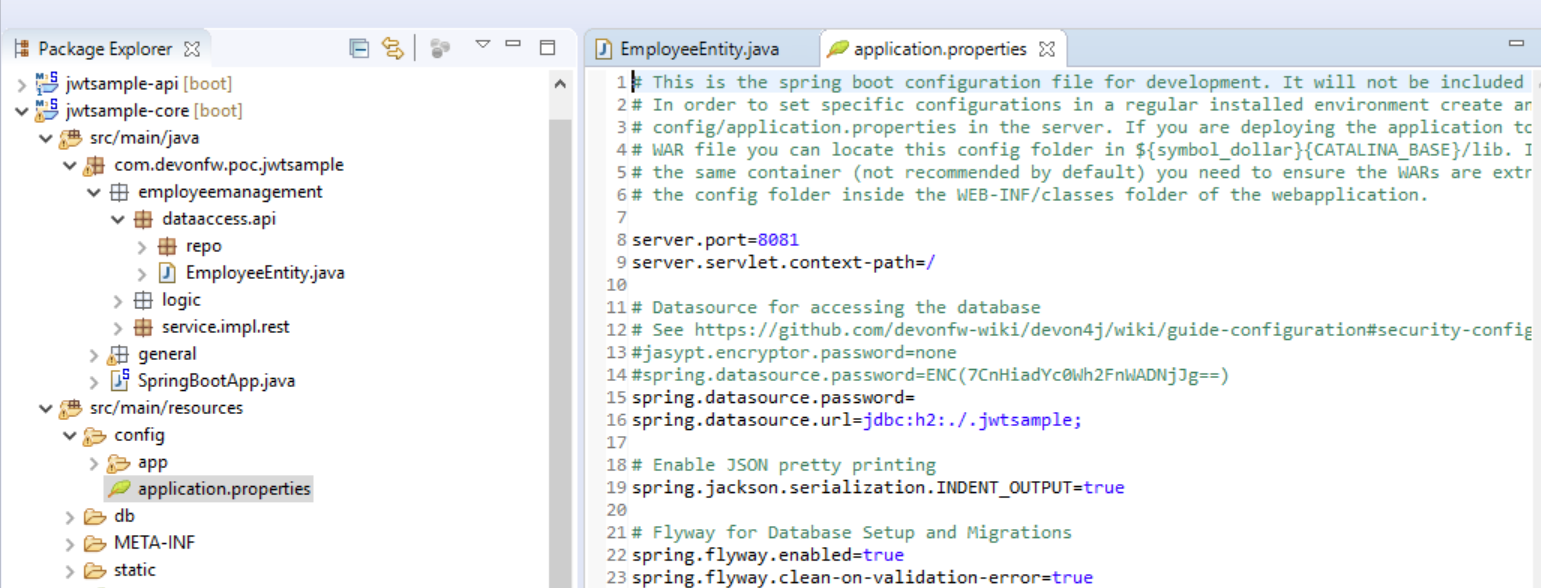

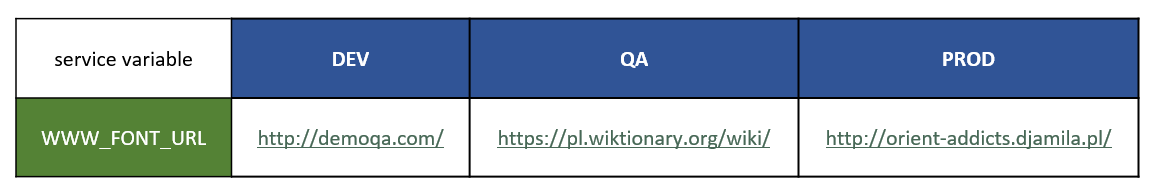

How to use system environment

In Page classes, when you load or start a web page, it is uncommon to store a fixed main URL.

Value flexibility is a must when your web application under test has a different main URL depending on the environment (DEV, QA, SIT, …, PROD).

Instead of a hard-coded main URL variable, you build your Page classes with a dynamic variable.

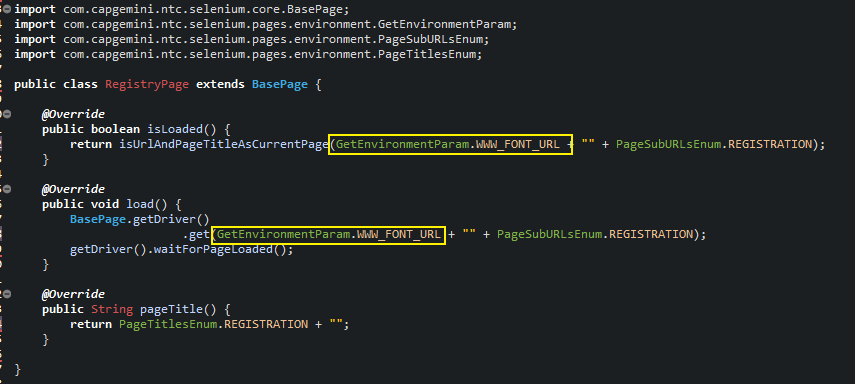

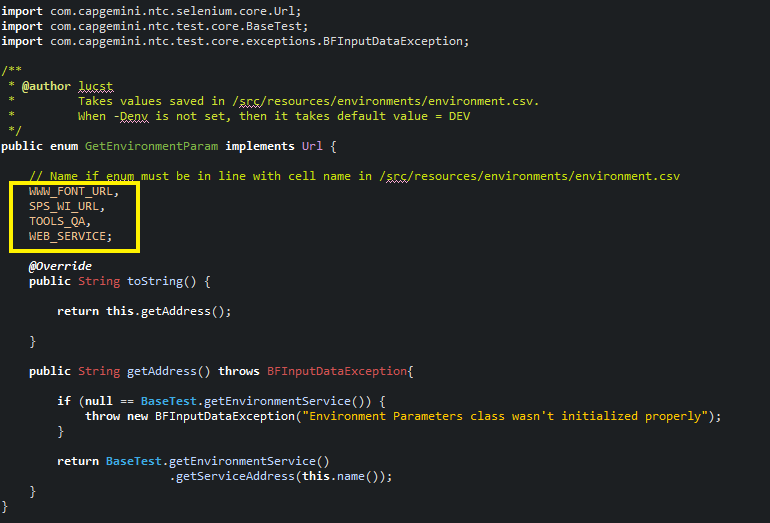

An example of a dynamic variable: GetEnvironmentParam.WWW_FONT_URL

How to create / update system environment

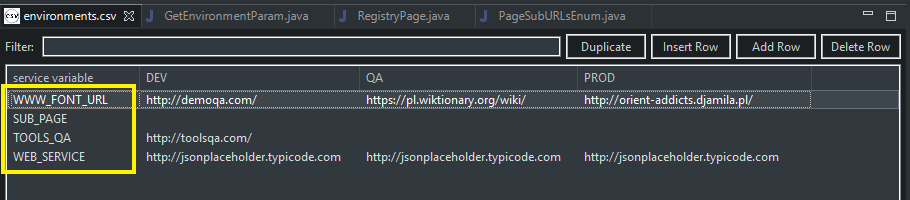

External file with variable values

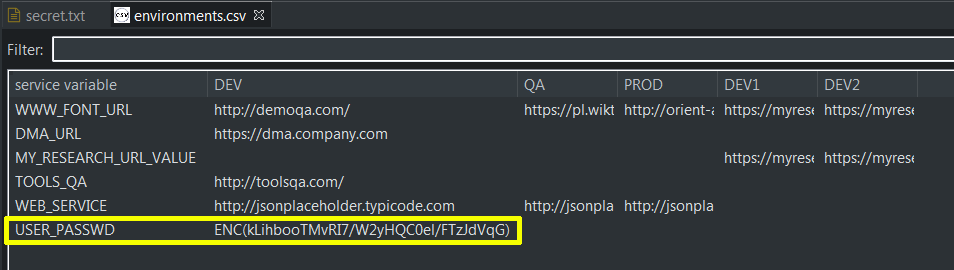

Dynamic variable values are stored under path mrchecker-app-under-test\src\resources\enviroments\environments.csv.

NOTE: As environments.csv is a comma-separated file, please be careful when editing it and saving it in Excel.

Encrypting sensitive data

Some types of data you might want to store as environment settings are sensitive in nature (e.g. passwords). You might not want to store them (at least not in their plaintext form) in your repository. To be able to encrypt sensitive data you need to do the following:

- Create a secret (a long, random string of characters) and store it under mrchecker-app-under-test\src\resources\secretData.txt. Example:

  LhwbTm9V3FUbBO5Tt5PiTUEQrXGgWrDLCMthnzLKNy1zA5FVTFiTdHRQAyPRIGXmsAjPUPlJSoSLeSBM

- Exclude the file from being checked into the git repository by adding it to .gitignore. You will need to pass the file to your teammates over a different channel.

- Encrypt the values before putting them into the environments.csv file by creating the following script (put the script where your jasypt library resides, e.g. C:\MrChecker_Test_Framework\m2\repository\org\jasypt\jasypt\1.9.2):

  @ECHO OFF
  set SCRIPT_NAME=encrypt.bat
  set EXECUTABLE_CLASS=org.jasypt.intf.cli.JasyptPBEStringEncryptionCLI
  set EXEC_CLASSPATH=jasypt-1.9.2.jar
  if "%JASYPT_CLASSPATH%" == "" goto computeclasspath
  set EXEC_CLASSPATH=%EXEC_CLASSPATH%;%JASYPT_CLASSPATH%
  :computeclasspath
  IF "%OS%" == "Windows_NT" setlocal ENABLEDELAYEDEXPANSION
  FOR %%c in (%~dp0..\lib\*.jar) DO set EXEC_CLASSPATH=!EXEC_CLASSPATH!;%%c
  IF "%OS%" == "Windows_NT" setlocal DISABLEDELAYEDEXPANSION
  set JAVA_EXECUTABLE=java
  if "%JAVA_HOME%" == "" goto execute
  set JAVA_EXECUTABLE="%JAVA_HOME%\bin\java"
  :execute
  %JAVA_EXECUTABLE% -classpath %EXEC_CLASSPATH% %EXECUTABLE_CLASS% %SCRIPT_NAME% %*

- Encrypt the values by calling

  .\encrypt.bat input=someinput password=secret
  ----ENVIRONMENT-----------------
  Runtime: Oracle Corporation Java HotSpot(TM) 64-Bit Server VM 25.111-b14
  ----ARGUMENTS-------------------
  input: someinput
  password: secret
  ----OUTPUT----------------------
  JN3nOFol2GMZoUxR5z2wI2qdipcNH1UD

- Mark the value as encrypted by adding the prefix 'ENC(' and the suffix ')', like:

  ENC(JN3nOFol2GMZoUxR5z2wI2qdipcNH1UD)
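The ENC(...) marker convention can be sketched in plain Java. The sketch below only handles the marker itself and performs no encryption or decryption; `EncMarkerSketch`, `mark`, `isMarked` and `unwrap` are hypothetical names and are not part of MrChecker or jasypt.

```java
// Illustrative sketch only: handles the ENC(...) marker, not the encryption itself.
final class EncMarkerSketch {

    static final String PREFIX = "ENC(";
    static final String SUFFIX = ")";

    // Wraps an already encrypted value so it is recognised as encrypted.
    static String mark(String cipherText) {
        return PREFIX + cipherText + SUFFIX;
    }

    // Tells whether a value from the settings file carries the marker.
    static boolean isMarked(String value) {
        return value.startsWith(PREFIX) && value.endsWith(SUFFIX);
    }

    // Returns the cipher text inside the marker, or the value unchanged when it is not marked.
    static String unwrap(String value) {
        if (!isMarked(value)) {
            return value;
        }
        return value.substring(PREFIX.length(), value.length() - SUFFIX.length());
    }

    public static void main(String[] args) {
        String marked = mark("JN3nOFol2GMZoUxR5z2wI2qdipcNH1UD");
        System.out.println(marked);
        System.out.println(unwrap(marked));
    }
}
```

A plaintext value passes through `unwrap` unchanged, so encrypted and unencrypted settings can live side by side in the same file.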

Bridge between external file and Page class

To map values from the external file to a Page class, use the GetEnvironmentParam class.

Therefore, when you add a new variable (row) to environments.csv, you might also need to add this variable to GetEnvironmentParam.

Run test case with system environment

To run a test case with a system environment, please use:

- -Denv=<NameOfEnvironment>
- <NameOfEnvironment> is taken as the column name from the file mrchecker-app-under-test\src\test\resources\enviroments\environments.csv
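How an environment name selects a column can be sketched in plain Java. The file layout used here is an assumption made for illustration (a header row with an empty first cell followed by the environment names, then one row per variable), the URLs are invented, and `EnvironmentCsvSketch` and `valuesFor` are hypothetical names that are not part of MrChecker.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative sketch only: assumed CSV layout and hypothetical names.
final class EnvironmentCsvSketch {

    // Returns variable name -> value for the column whose header equals env.
    static Map<String, String> valuesFor(List<String> csvLines, String env) {
        List<String> header = Arrays.asList(csvLines.get(0).split(",", -1));
        int column = header.indexOf(env);
        if (column < 1) {
            throw new IllegalArgumentException("Unknown environment: " + env);
        }
        Map<String, String> values = new LinkedHashMap<>();
        for (String line : csvLines.subList(1, csvLines.size())) {
            String[] cells = line.split(",", -1);
            values.put(cells[0], cells[column]);
        }
        return values;
    }

    public static void main(String[] args) {
        // Invented content; the real file may be laid out differently.
        List<String> csv = Arrays.asList(
                ",DEV,QA",
                "WWW_FONT_URL,https://dev.example.test,https://qa.example.test");
        // Mirrors -Denv=<NameOfEnvironment>; falls back to DEV when the property is not set.
        String env = System.getProperty("env", "DEV");
        System.out.println(valuesFor(csv, env).get("WWW_FONT_URL"));
    }
}
```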

Command Line

mvn test site -Dtest=RegistryPageTest -Denv=DEV

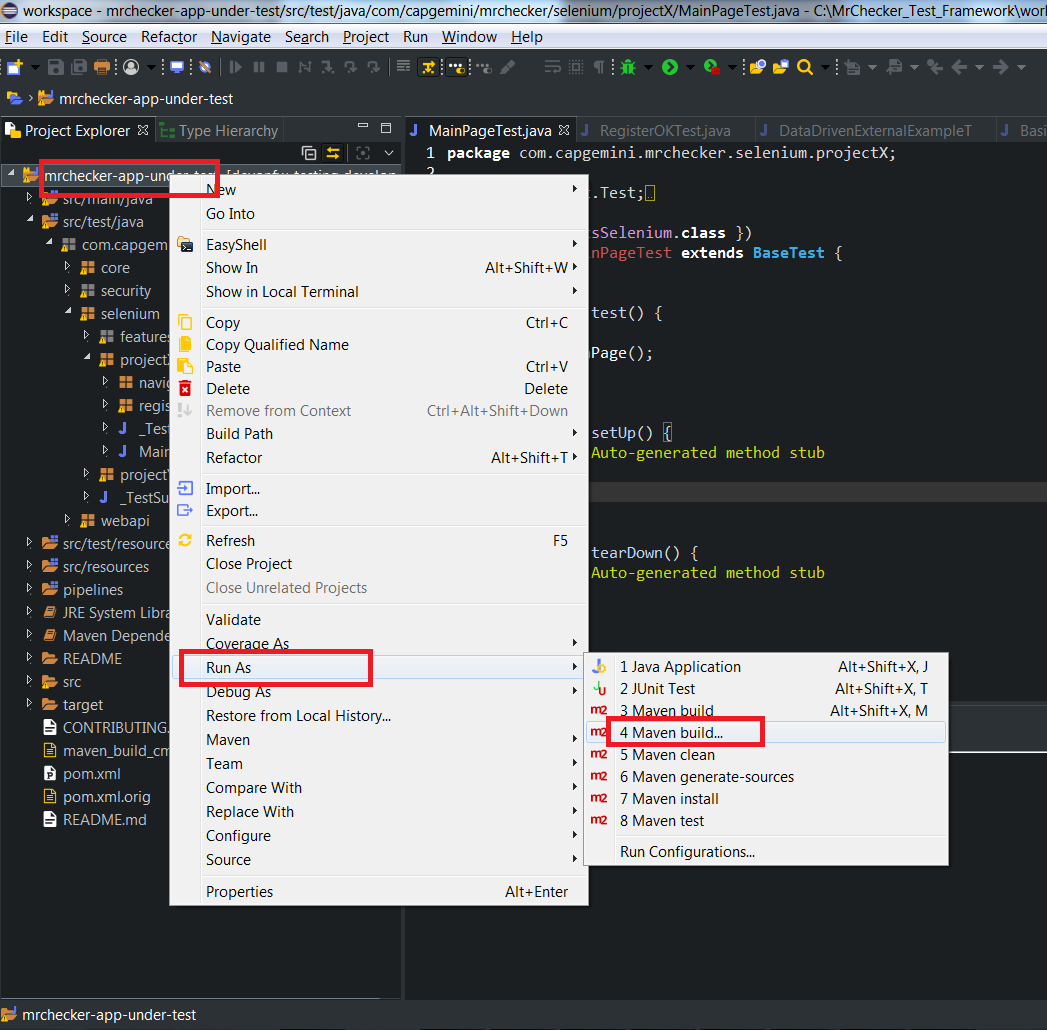

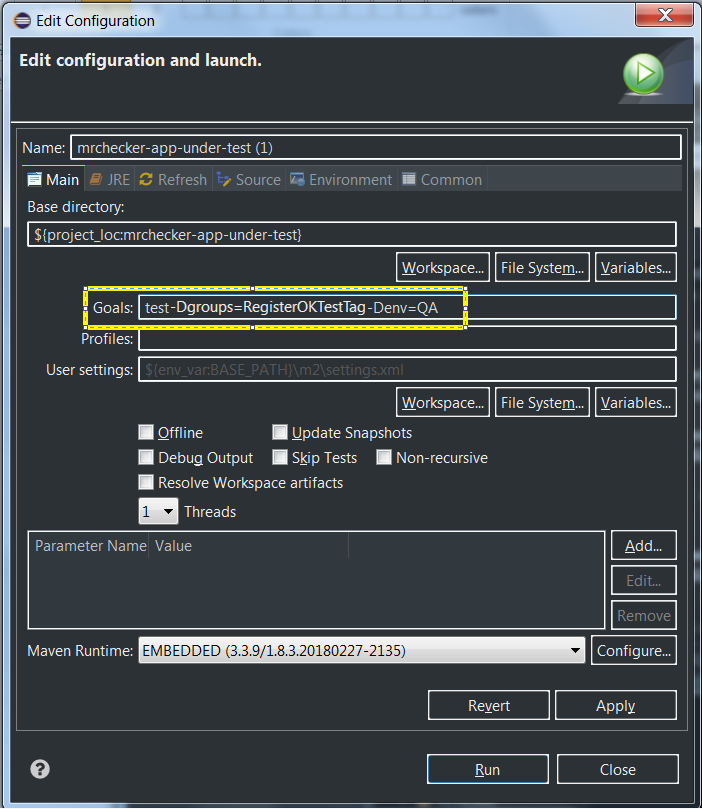

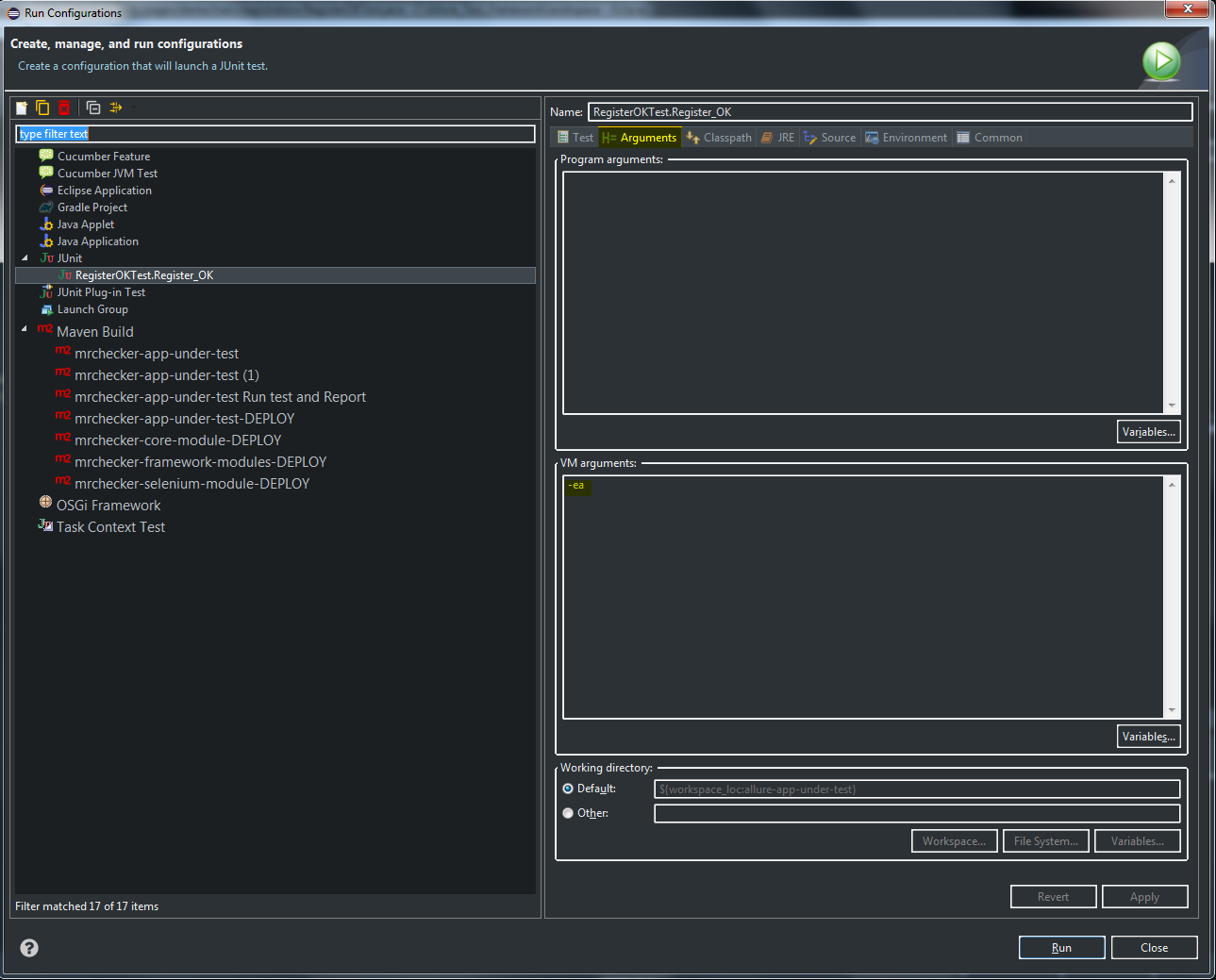

Eclipse

Since mrchecker-core-module version 5.6.2.1

Command Line

mvn test site -Dgroups=RegistryPageTestTag -Denv=DEV

Eclipse

Prior to mrchecker-core-module version 5.6.2.1

Command Line

mvn test site -Dtest=RegistryPageTest -Denv=DEV

Eclipse

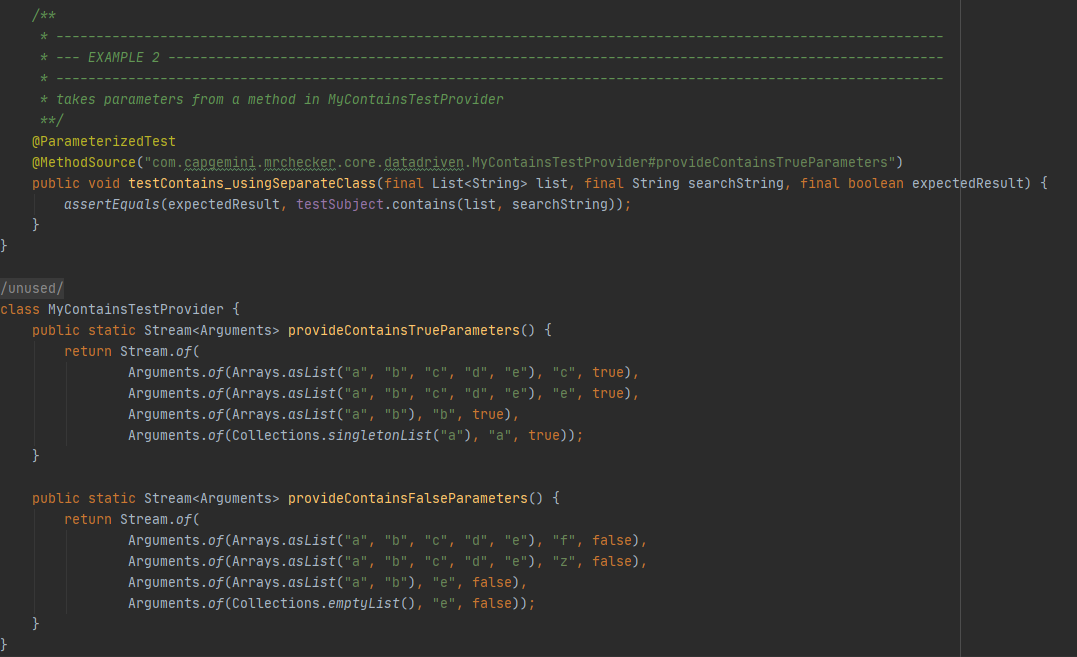

Selenium Module

Selenium Test Module

What is MrChecker E2E Selenium Test Module

Selenium Structure

Framework Features

- Construction of Framework Page Class
  - Every Page class must extend BasePage
  - What isLoaded(), load() and pageTitle() are for
  - How to create a selector variable
    - private static final By ButtonOkSelector = By.cssSelector(…)
  - How to prepare an 'everlasting' selector
  - Method/action naming convention
  - Why we should use findElementDynamic() and findElementQuietly() instead of the classic Selenium findElement
  - List of well-rounded groups of user friendly actions (ElementButton, ElementCheckbox, ElementInput, etc.)
  - Verification points of well-defined Page classes and Test classes
- Run on different browsers: Chrome, Firefox, IE, Safari, Edge
- Run with full range of resolution (mobile, desktop): Testing Responsive Design Webpage

How to start?

Read: My first Selenium Test

Selenium Best Practices

Selenium UFT Comparison

Selenium Structure

What is Selenium

Selenium is a framework for testing browser applications. The test automation supports:

- Frequent regression testing
- Repeating test case executions
- Documentation of test cases
- Finding defects
- Multiple Browsers

The Selenium testing framework consists of multiple tools:

- Selenium IDE

  The Selenium Integrated Development Environment is a prototyping tool for building test scripts. It is a Firefox plugin and provides an easy-to-use interface for developing test cases. Additionally, Selenium IDE contains a recording feature that allows the user to record inputs that can be automatically re-executed in the future.

- Selenium 1

  Selenium 1, also known as Selenium RC, commands a Selenium Server to launch and kill browsers, interpreting the Selenese commands passed from the test program. The Server acts as an HTTP proxy. This tool is deprecated.

- Selenium 2

  Selenium 2, also known as Selenium WebDriver, is designed to supply a well-designed, object-oriented API that provides improved support for modern advanced web-app testing problems.

- Selenium 3.0

  The major change in Selenium 3.0 is removing the original Selenium Core implementation and replacing it with one backed by WebDriver. There is now a W3C specification for browser automation, based on the Open Source WebDriver.

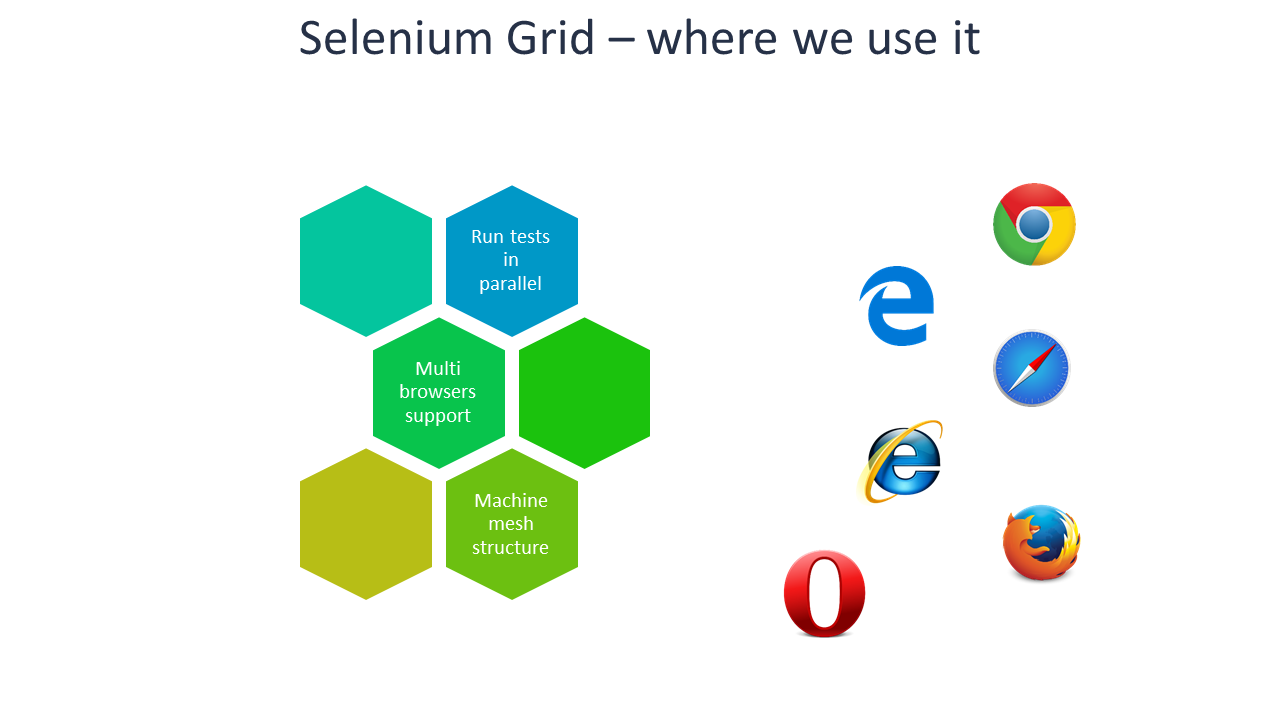

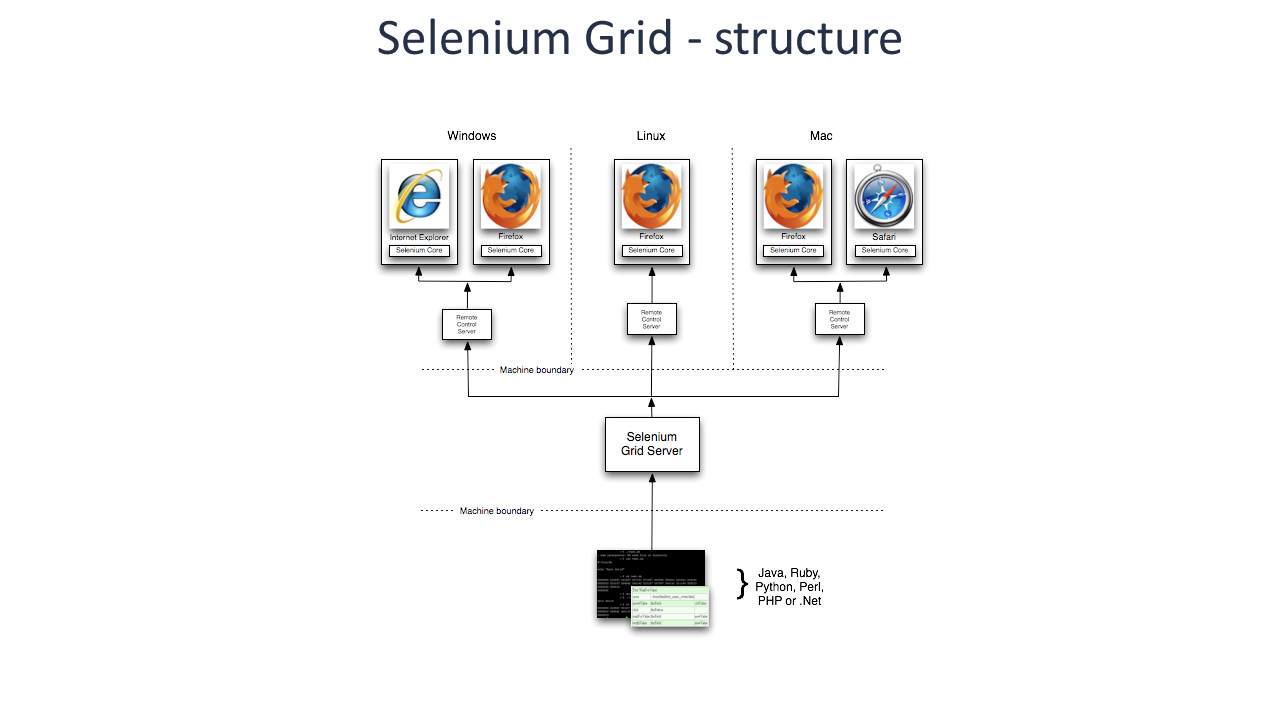

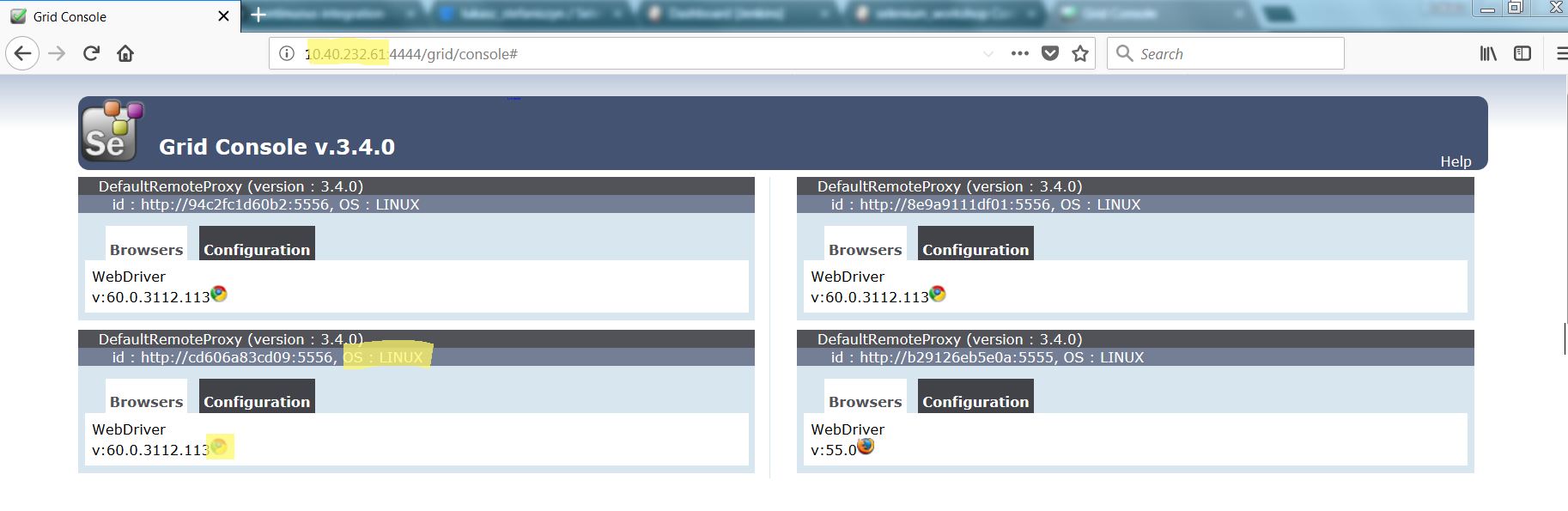

Selenium Grid

Selenium Grid allows the scaling of Selenium RC test cases that must be run in multiple and potentially variable environments. The tests can be run in parallel on different remote machines.

Selenium on the Production Line

More information on Selenium on the Production Line can be found here.

tl;dr

The Production Line has containers running Chrome and Firefox Selenium Nodes. The communication with these nodes is accomplished using Selenium Grid.

Having issues using Selenium on the Production Line? Check the Production Line issue list, maybe it’s a known issue that can be worked around.

What is WebDriver

On the one hand, it is a very convenient API for a programmer that allows for interaction with the browser, on the other hand it is a driver concept that enables this direct communication.

A tester, through their test script, can command WebDriver to perform certain actions on the web application under test (WAUT) in a certain browser. The user issues these commands through the client libraries, also called language bindings, provided by WebDriver.

By using the language-binding client libraries, a tester can invoke browser-specific implementations of WebDriver, such as Firefox Driver, IE Driver, Opera Driver, and so on, to interact with the WAUT of the respective browser. These browser-specific implementations of WebDriver will work with the browser natively and execute commands from outside the browser to simulate exactly what the application user does.

After execution, WebDriver will send the test result back to the test script for developer’s analysis.

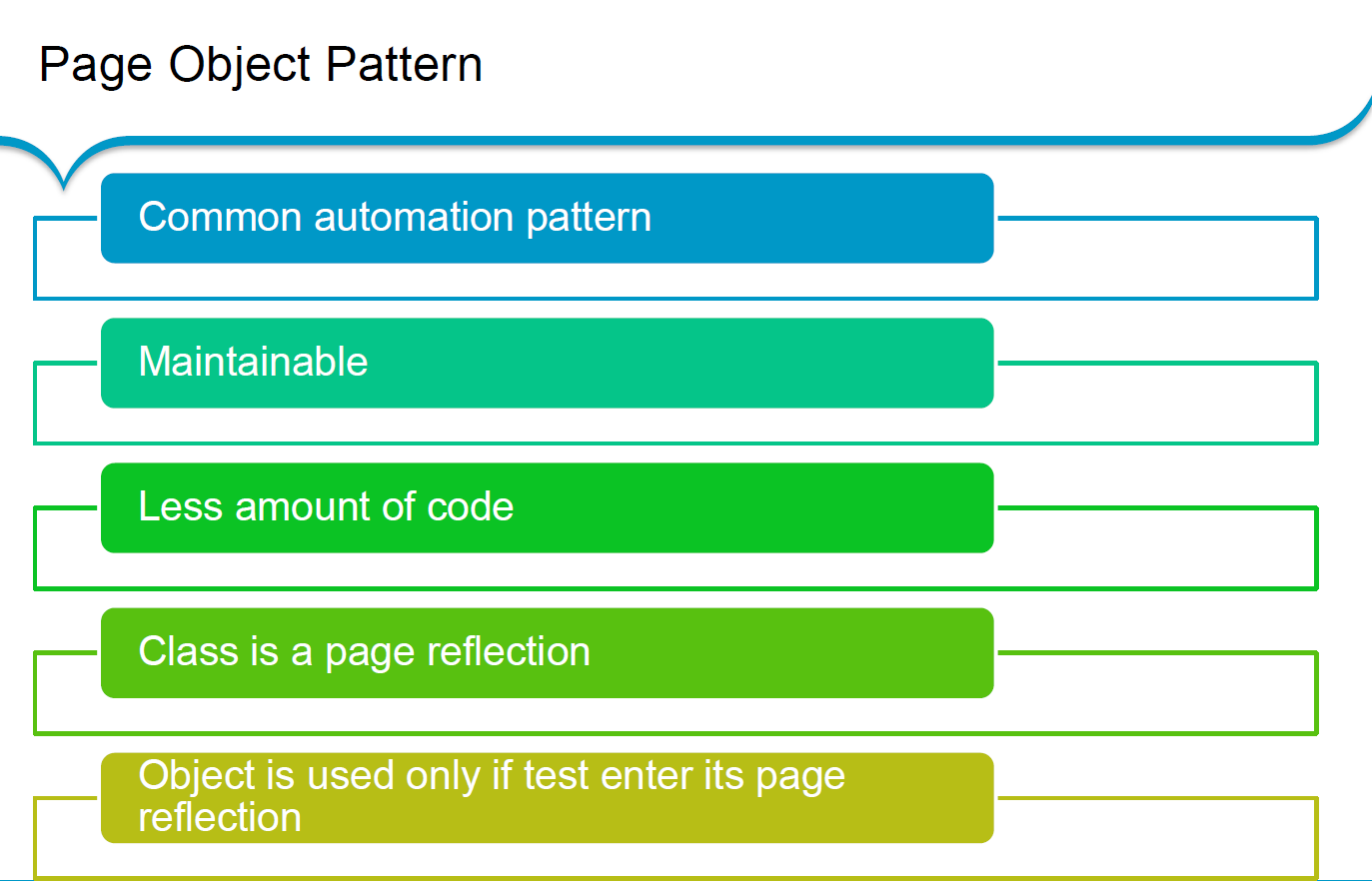

What is Page Object Model?

Creating Selenium test cases can result in an unmaintainable project. One of the reasons is that too much duplicated code is used. Duplicated code can result from duplicated functionality, which leads to duplicated usage of locators. The main disadvantage of duplicated code is that the project becomes less maintainable: if a locator changes, you have to walk through the whole test code to adjust it wherever it is used. By using the page object model we can make test code less brittle and reduce or eliminate duplicated test code. In addition, it improves readability and allows us to create interactive documentation. Last but not least, we can create tests with fewer keystrokes. An implementation of the page object model can be achieved by separating the abstraction of the test object from the test scripts.
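The separation described above can be sketched in plain Java without Selenium. Everything in this sketch is an assumption made for illustration: `FakeDriver` is a hypothetical stand-in for a browser driver, and `LoginPage` and the `#username` locator are invented names. The point is only that the locator lives in one place and test code talks to page methods.

```java
import java.util.HashMap;
import java.util.Map;

public class PageObjectSketch {

    /** Hypothetical stand-in for a browser driver: remembers typed text per locator. */
    static class FakeDriver {
        private final Map<String, String> fields = new HashMap<>();

        void type(String locator, String text) {
            fields.put(locator, text);
        }

        String read(String locator) {
            return fields.getOrDefault(locator, "");
        }
    }

    /** Page object: the only class that knows the locator of the user name field. */
    static class LoginPage {
        private static final String USERNAME_INPUT = "#username"; // hypothetical locator
        private final FakeDriver driver;

        LoginPage(FakeDriver driver) {
            this.driver = driver;
        }

        void enterUsername(String name) {
            driver.type(USERNAME_INPUT, name);
        }

        String getUsername() {
            return driver.read(USERNAME_INPUT);
        }
    }

    public static void main(String[] args) {
        // Test code never mentions the locator, only page methods.
        LoginPage page = new LoginPage(new FakeDriver());
        page.enterUsername("alice");
        System.out.println(page.getUsername()); // prints "alice"
    }
}
```

If the locator changes, only the `USERNAME_INPUT` constant has to be updated; every test that calls `enterUsername()` keeps working.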

Basic Web elements

This page will provide an overview of basic web elements.

| Name | Method to use element |
|---|---|
| Form: Input Text | |
| Form: Label | |
| Form: Submit Button | |
| Page: Button | |
| Checkbox | |
| Radio | |
| Elements (Tabs, Cards, Account, etc.) | |
| Dropdown List | |
| Link | - |
| Combobox | |

Comparison of how picking a value from a checkbox can be done:

- by classic Selenium atomic actions
- by our enhanced Selenium wrapper

Classic Selenium atomic actions

List<WebElement> checkboxesList = getDriver()
        .findElements(selectorHobby);
WebElement currentElement;
for (int i = 0; i < checkboxesList.size(); i++) {
    currentElement = checkboxesList.get(i);
    // Click only the checkbox that matches the value and is not selected yet
    if (currentElement.getAttribute("value").equals(hobby.toString())
            && !currentElement.isSelected()) {
        currentElement.click();
    }
}

Enhanced Selenium in E2E test framework

getDriver().elementCheckbox(selectorHobby).setCheckBoxByValue(hobby.toString());

Framework Features

Page Class

Page Object Models allow for the representation of a webpage as a Java Class. The class contains all required web elements like buttons, textfields, labels, etc. When initializing a new project, create a new package to store the Page Object Models in.

Initialization

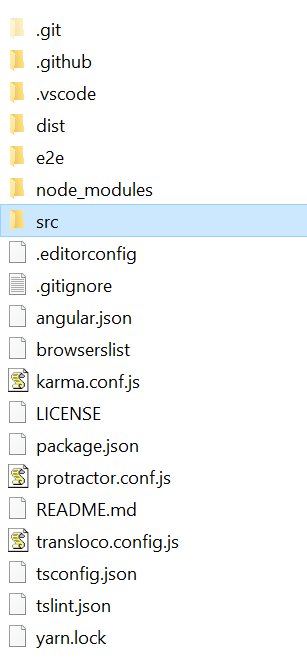

Source folder: allure-app-under-test/src/main/java

Name: com.example.selenium.pages.YOUR_PROJECT

Classes being created inside of this new package have to extend the BasePage class. As a result, a few abstract methods from BasePage have to be implemented.

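public class DemoPage extends BasePage {

    @Override
    public boolean isLoaded() {
        // Placeholder: replace with a real check that the page is loaded
        return false;
    }

    @Override
    public void load() {
        // Placeholder: tell the driver to open the page
    }

    @Override
    public String pageTitle() {
        // Placeholder: return the expected page title
        return "";
    }

}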

The example above demonstrates a minimum valid Page Object class with all required methods included.

The inherited method isLoaded() can be used to check if the current Page Object Model has been loaded correctly. There are multiple ways to verify a correctly loaded page. One example would be to compare the actual page title with the expected page title.

public boolean isLoaded() {
    return getDriver().getTitle().equals("EXPECTED_TITLE");
}

The method load() can be used to tell the webdriver to load a specific page.

public void load() {

getDriver().get("http://SOME_PAGE");

}

The pageTitle() method returns a String containing the page title.

Creating a selector variable

To initialize web elements, a large variety of selectors can be used.

We recommend creating a private and constant field for every web element you’d like to represent in Java. Use the guide above to find the preferred selector and place it in the code below at "WEB_ELEMENT_SELECTOR".

private static final By someWebElementSelector = By.cssSelector("WEB_ELEMENT_SELECTOR");

As soon as you create the selector above, you can make use of it to initialize a WebElement object.

WebElement someWebElement = getDriver().findDynamicElement(someWebElementSelector);

Note: The examples displayed in the cssSelector.docx file use the Selenium method driver.findElement() to find elements. However, when using this framework we recommend findDynamicElement() or findQuietlyElement(). findDynamicElement() allows waiting for dynamic elements, for example buttons that pop up.
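The idea of waiting for a dynamic element can be illustrated with a small polling loop in plain Java. This is only a sketch of the concept, not the framework's findDynamicElement() implementation; the `waitFor` helper, its parameters and the simulated lookup are all assumptions made for this example.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.function.Supplier;

public class DynamicWaitSketch {

    /**
     * Repeats the lookup until it yields a value or the timeout elapses.
     * Returns an empty Optional when nothing showed up in time.
     */
    static <T> Optional<T> waitFor(Supplier<Optional<T>> lookup, Duration timeout, Duration pollInterval) {
        long deadline = System.nanoTime() + timeout.toNanos();
        while (true) {
            Optional<T> result = lookup.get();
            if (result.isPresent()) {
                return result;
            }
            if (System.nanoTime() >= deadline) {
                return Optional.empty();
            }
            try {
                Thread.sleep(pollInterval.toMillis());
            } catch (InterruptedException e) {
                // Preserve the interrupt flag and give up waiting.
                Thread.currentThread().interrupt();
                return Optional.empty();
            }
        }
    }

    public static void main(String[] args) {
        int[] attempts = {0};
        // Simulated element that only "appears" on the third lookup.
        Supplier<Optional<String>> lookup =
                () -> ++attempts[0] >= 3 ? Optional.of("button") : Optional.<String>empty();

        Optional<String> found = waitFor(lookup, Duration.ofSeconds(2), Duration.ofMillis(50));
        System.out.println(found.orElse("not found") + " after " + attempts[0] + " lookups");
    }
}
```

A plain findElement()-style call corresponds to a single lookup, which fails if the element has not been rendered yet; the polling variant tolerates a short delay.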

Creating a page method

To interact with the page object, we recommend creating methods for each action.

public void enterGoogleSearchInput(String query) {

...

}

Creating a method like the one above allows the test case to run something like googleSearchPage.enterGoogleSearchInput("Hello") to interact with the page object.

Naming Conventions

For code uniformity and readability, we provide a few method naming conventions.

| Element | Action | Name (example) |
|---|---|---|
| Form: Input text | enter | |
| | is (label) | |
| | is (value) | |
| | get | |
| Form: Label | get | |
| | is (value) | |
| | is (label) | |
| Form: Submit Button | submit | |
| | is | |
| Page: Button | click | |
| | is | |
| Checkbox | set | |
| | unset | |
| | is (present) | |
| | is (value) | |
| Radio | set | |
| | is (present) | |
| | is (visible) | |
| | get | |
| Elements (Tabs, Cards, Account, etc.) | click | |
| | is | |
| Dropdown List | select | |
| | unselect | |
| | multiple select | |
| | is (list) | |
| | is (element present) | |
| | is (element selected) | |
| Link | click | |
| | is | |
| Combobox | select | |
| | is (present) | |
| | is (contain) | |
| Element Attribute | get | |
| | get | |

A css selector is used to select elements from an HTML page.

Selection by element tag, class or id are the most common selectors.

<p class='myText' id='123'>

This text element (p) can be found by using any one of the following selectors:

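- The HTML element: "p". Note: in practical use this is too generic; if a preceding text section is added, the selected element will change.
- The class attribute preceded by ".": ".myText"
- The id attribute preceded by "#". Note: a CSS id selector cannot start with a digit, so "#123" is not valid as written; for a numeric id such as the one in this example, use the attribute form "[id='123']".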

Using other attributes

When a class or an id attribute is not sufficient to identify an element, other attributes can be used as well, by using "[attribute=value]": For example:

<a href='https://ns.nl/example.html'>

This can be selected by using the entire value: "a[href='https://ns.nl/example.html']". For selecting links starting with, containing, or ending with a value, see the list below.
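The attribute operators mentioned above are standard CSS: `=` matches the whole value, `^=` the beginning, `*=` any substring and `$=` the end. The helper class below is a hypothetical illustration that only builds selector strings; the class and method names are not part of the framework. The resulting strings can be passed to a CSS selector lookup.

```java
public class AttributeSelectors {

    // Values are wrapped in single quotes, so they must not contain a single quote themselves.

    /** Exact match, e.g. a[href='https://ns.nl/example.html'] */
    static String equalsTo(String tag, String attribute, String value) {
        return tag + "[" + attribute + "='" + value + "']";
    }

    /** Attribute value starts with the given prefix, e.g. a[href^='https://'] */
    static String startsWith(String tag, String attribute, String prefix) {
        return tag + "[" + attribute + "^='" + prefix + "']";
    }

    /** Attribute value contains the given fragment, e.g. a[href*='ns.nl'] */
    static String contains(String tag, String attribute, String fragment) {
        return tag + "[" + attribute + "*='" + fragment + "']";
    }

    /** Attribute value ends with the given suffix, e.g. a[href$='.html'] */
    static String endsWith(String tag, String attribute, String suffix) {
        return tag + "[" + attribute + "$='" + suffix + "']";
    }

    public static void main(String[] args) {
        System.out.println(equalsTo("a", "href", "https://ns.nl/example.html"));
        System.out.println(startsWith("a", "href", "https://"));
        System.out.println(contains("a", "href", "ns.nl"));
        System.out.println(endsWith("a", "href", ".html"));
    }
}
```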

Using sub-elements

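CSS selectors can be combined by appending them, separated by a space, to select sub-elements:

<div id='1'><a href='ns.nl'></a></div>

<div id='2'><a href='nsinternational.nl'></a></div>

In the example above, the link element to nsinternational can be obtained with: "[id='2'] a". The shorthand "#2 a" is not valid CSS, because an id selector cannot start with a digit.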

When possible avoid

- Using paths of commonly used HTML elements within the containers (HTML: div). This will cause failures when a container is added, a common occurrence during development, e.g. "div div p". Use class or id instead; if those are not available, request them to be added in the production code.
- Magic order numbers. It is possible to get the second text element in its parent container by using the selector "p:nth-child(2)". If the items represent different things, ask the developer to add specific attributes. It is also possible to request all items, with a selector similar to ".myList li", and iterate through them later.

List

A good list with CSS Selectors can be found at W3Schools:

https://www.w3schools.com/cssref/css_selectors.asp

Selenium UFT Comparison

| Subject | HP UFT | HP LeanFT | Selenium | Selenium IDE |
|---|---|---|---|---|
| Language | VBScript | Same as Selenium | Supports several languages. Java | Javascript |
| Learning curve | Based on VBScript which is relatively easy to learn | Less intuitive, more coding knowledge necessary | Less intuitive, more coding skills necessary | Record/playback possible. Generated code difficult to maintain |
| Project type | Traditional | Agile | Agile | Agile |
| User oriented | More Tester | More Developer | More Developer | More Tester |
| Object recognition | Test object identification and storage in object repository | Same as UFT | With Firebug | Same as SE |
| Customizations | Only the available standard. No customization | Same as UFT | Lots of customizations possible | Fewer than SE |
| Framework | Needed. Exists in ATaaS | Needed. Integration with Fitnesse, Cucumber, Gauche | No Framework. Limited capabilities of the tool. | |
| Operating System support | Runs on Windows | Runs on Windows | Multiple OS support. With Grid: testing on multiple devices at same time | Plugin for Firefox |
| Application coverage | Many | Many | Web only | Web only |
| Multiple browsers | In UFT 12.5 available | In 12.5 available | Multiple tests in multiple browser windows at once and faster support for new browser versions | Multiple tests in multiple browser windows at once and faster support for new browser versions |
| System Load | High system load (RAM & CPU usage) | Lower load than HP UFT? | Lower load than HP UFT | Lower load than HP UFT |
| ALM integration | With HP ALM – full integration | Jira, Jenkins Not with ALM tool | Same as SE | |
| Integration with other tools | A lot can be built, but many are already covered. | More than UFT. | Freeware and can be integrated with different open source tools | Freeware and can be integrated with different open source tools |
| Addins | Add-ins necessary to access all capabilities of the tool – license related | Same as UFT | See integration with other tools | See integration with other tools |
| Reporting | Complete, link to ALM | Same as UFT | No native mechanism for generating reports, but multiple plugins available for reporting | No native mechanism for generating reports, but multiple plugins available for reporting |
| Support | HP full support | Same as UFT | Limited support as it is open source | Limited support as it is open source |
| License costs | About 17K – Capgemini price 5K. Included in the S2 service charge | Same price as HP UFT | Free | Free limited functionality (no iterations / conditional statements) |
| iVAL Service | ATaaS | Not in a S2 service | Not in a S2 service | Not in a S2 service |

Bold for key differentiators.

Projects also choose an available resource and the knowledge of that resource.

Both: Framework determines the quality of automation. Needs to be set up by someone with experience with the tool

Run on different browsers

To execute each test with a chosen installed browser, specific arguments are required in Run configuration.

It is necessary to enter -Dbrowser= with browser parameter name as an argument (in 'Arguments' tab):

- firefox
- ie
- phantomjs
- chrome
- chromeheadless

For example: -Dbrowser=ie

-ea should be entered as an argument to restore default settings.

Browser options

To run a browser with specific options during runtime, please use

-DbrowserOptions="< options >"

> mvn test -DbrowserOptions="param1"

> mvn test -DbrowserOptions="param1=value1"

Examples:

- One parameter: -DbrowserOptions="headless"
- One parameter: -DbrowserOptions="--incognito"
- Many parameters: -DbrowserOptions="headless;param1=value1;testEquals=FirstEquals=SecondEquals;--testMe"
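The examples above suggest that options are separated by ";" and that a key is separated from its value by the first "=". The sketch below shows one way such a string could be split under that assumption. It is not the framework's actual parser, and the class and method names are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class BrowserOptionsSketch {

    /**
     * Splits "a;b=c;d=e=f" into ordered key/value pairs.
     * Options without "=" get an empty value; only the first "=" separates key and value.
     */
    static Map<String, String> parse(String rawOptions) {
        Map<String, String> options = new LinkedHashMap<>();
        for (String token : rawOptions.split(";")) {
            String trimmed = token.trim();
            if (trimmed.isEmpty()) {
                continue; // tolerate empty segments such as a trailing ";"
            }
            int separator = trimmed.indexOf('=');
            if (separator < 0) {
                options.put(trimmed, "");
            } else {
                options.put(trimmed.substring(0, separator), trimmed.substring(separator + 1));
            }
        }
        return options;
    }

    public static void main(String[] args) {
        String raw = "headless;param1=value1;testEquals=FirstEquals=SecondEquals;--testMe";
        for (Map.Entry<String, String> option : parse(raw).entrySet()) {
            System.out.println("[" + option.getKey() + "] -> [" + option.getValue() + "]");
        }
    }
}
```

Splitting on the first "=" only is what keeps a value such as "FirstEquals=SecondEquals" intact.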

List of options/capabilities supported by:

Run with full range of resolution

In order to execute tests in different browser resolutions, it is required to provide these resolutions as a test parameter.

Test example with resolutions included may be found in ResolutionTest test class

Example of resolution notation is available in ResolutionEnum class

Test with given resolution parameters will be launched as many times as the number of resolutions provided.

Selenium Best Practices

The following table displays a few best practices that should be taken into consideration when developing Selenium test cases.

| Best Practices | Description |
|---|---|
| "Keep it Simple" | Do not force the use of every Selenium feature available - Plan before creating the actual test cases |
| Using Cucumber | Cucumber can be used to create initial test cases for further decision making |
| Supporting multiple browsers | Test on multiple browsers (in parallel, if applicable) if the application is expected to support multiple environments |
| Test reporting | Make use of test reporting modules like JUnit which is included in the framework |
| Maintainability | Always be aware of the maintainability of tests - You should always be able to adapt to changes |
| Testing types | Which tests should be created? Rule of thumb: 70% Unit test cases, 20% Integration test cases and 10% UI Test cases |
| Test data | Consider before actually developing tests and choosing tools: Where to get test data from, how to reset test data |

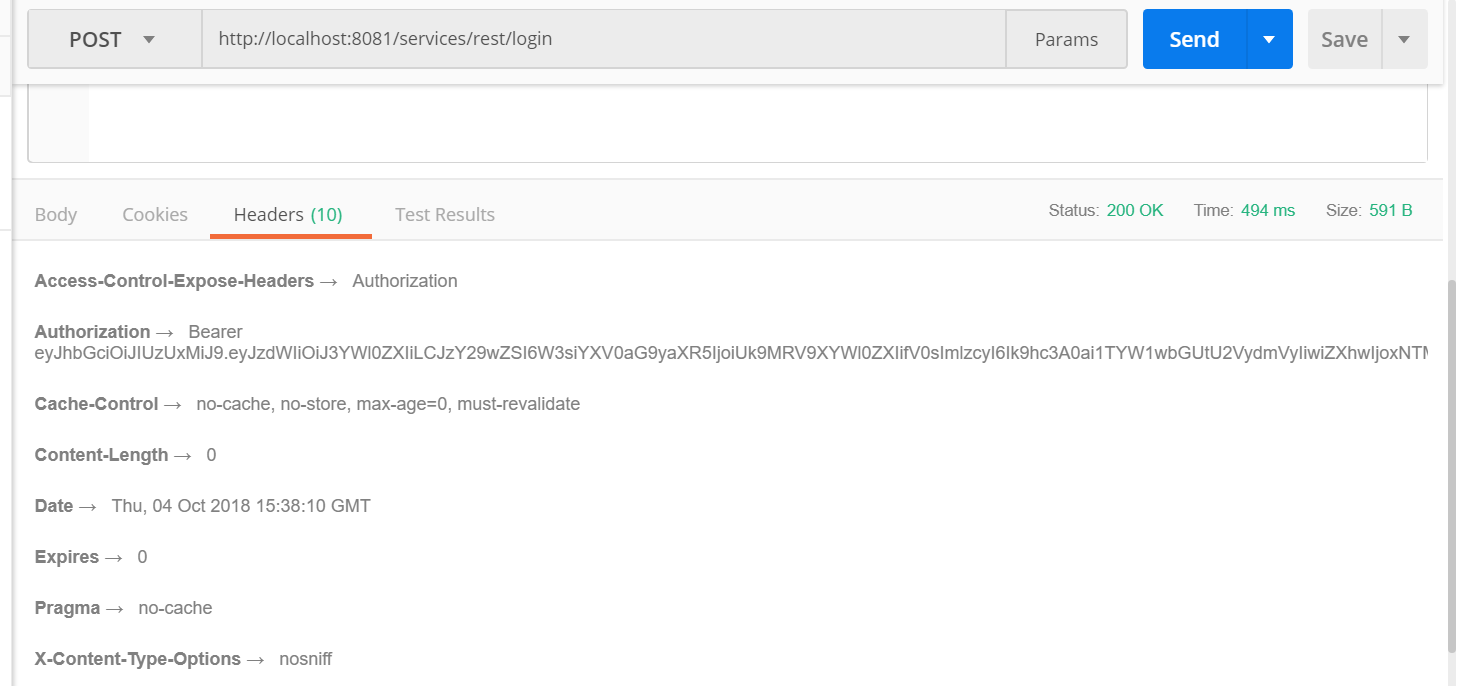

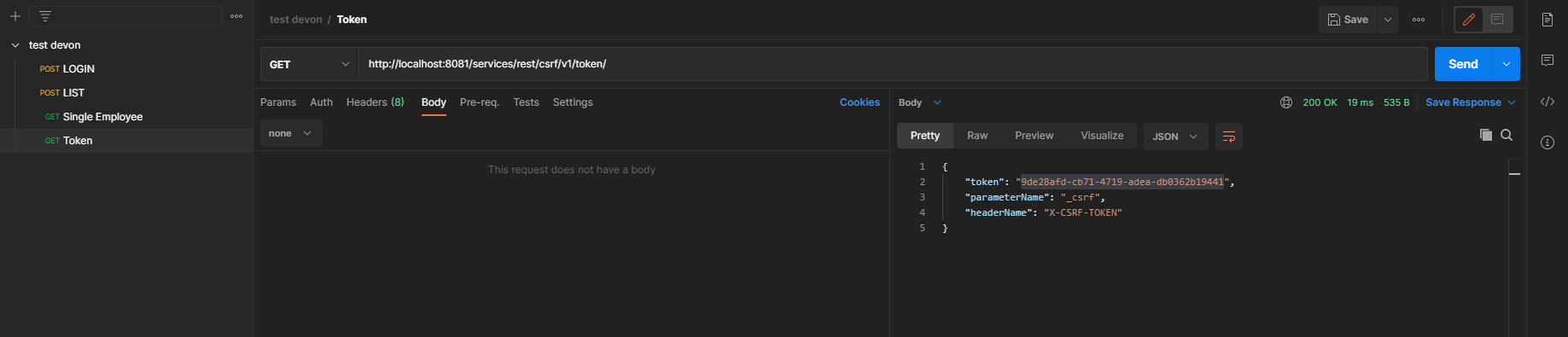

Web API Module

Service Virtualization

Is it doable to keep pace in QA with today’s software agile approach?

DevOps + Microservices + Shift left + Time to Market == ? Service virtualization ?

Test pyramid

What is service virtualization

Service Virtualization has become recognized as one of the best ways to speed up testing and accelerate your time to market.

Service virtualization lets you automatically execute tests even when the application under test’s dependent system components (APIs, third-party applications, etc.) cannot be properly accessed or configured for testing. By simulating these dependencies, you can ensure that your tests will encounter the appropriate dependency behaviour and data each and every time that they execute.

Service virtualization is the simulation of interfaces – not the virtualization of systems.

According to Wikipedia’s service virtualization entry: Service virtualization emulates the behaviour of software components to remove dependency constraints on development and testing teams. Such constraints occur in complex, interdependent environments when a component connected to the application under test is:

- Not yet completed
- Still evolving
- Controlled by a third-party or partner
- Available for testing only in a limited capacity or at inconvenient times
- Difficult to provision or configure in a test environment
- Needed for simultaneous access by different teams with varied test data setup and other requirements
- Restricted or costly to use for load and performance testing

For instance, instead of virtualizing an entire database (and performing all associated test data management as well as setting up the database for every test session), you monitor how the application interacts with the database, then you emulate the related database behaviour (the SQL queries that are passed to the database, the corresponding result sets that are returned, and so forth).

Mocks, stubs and virtual services

The most commonly discussed categories of test doubles are mocks, stubs and virtual services.

Stub: a minimal implementation of an interface that normally returns hardcoded data that is tightly coupled to the test suite. It is most useful when the suite of tests is simple and keeping the hardcoded data in the stub is not an issue. Some stubs are handwritten; some can be generated by tools. A stub is normally written by a developer for personal use. It can be shared with testers, but wider sharing is typically limited by interoperability issues related to software platform and deployment infrastructure dependencies that were hardcoded. A common practice is when a stub works in-process directly with classes, methods, and functions for the unit, module, and acceptance testing. Some developers will say that a stub can also be primed, but you cannot verify an invocation on a stub. Stubs can also be communicating "over the wire", for example, HTTP, but some would argue that they should be called virtual services in that case.

Mock: a programmable interface observer that verifies outputs against expectations defined by the test. It is frequently created using a third-party library, for example Mockito, JMock or WireMock in Java. It is most useful when you have a large suite of tests and a stub will not be sufficient, because each test needs a different data set-up and maintaining all of them in a stub would be costly. The mock lets us keep the data set-up in the test. A mock is normally written by a developer for personal use, but it can be shared with testers. However, wider sharing is typically limited by interoperability issues related to software platform and deployment infrastructure dependencies that were hardcoded. Mocks most often work in-process directly with classes, methods, and functions for unit, module, and acceptance testing. A mock provides responses based on a given request satisfying predefined criteria (also called request or parameter matching). A mock also focuses on interactions rather than state, so mocks are usually stateful. For example, you can verify how many times a given method was called or the order of calls made to a given object.

Virtual service: a test double, often provided as Software-as-a-Service (SaaS), that is always called remotely and never works in-process directly with methods or functions. A virtual service is often created by recording traffic using one of the service virtualization platforms instead of building the interaction pattern from scratch based on interface or API documentation. A virtual service can be used to establish a common ground for teams to communicate and to facilitate artefact sharing with other development teams as well as testing teams. A virtual service is called remotely (over HTTP, TCP, etc.) and normally supports multiple protocols (e.g. HTTP, MQ, TCP, etc.), while a stub or mock normally supports only one. Sometimes virtual services will require users to authorize, especially when deployed in environments with enterprise-wide visibility. Service virtualization tools used to create virtual services will most often have user interfaces that allow less tech-savvy software testers to hit the ground running, before diving into the details of how specific protocols work. They are sometimes backed by a database. They can also simulate non-functional characteristics of systems such as response times or slow connections. You can sometimes find virtual services that provide a set of stubbed responses for given request criteria and pass every other request to a live backend system (partial stubbing). Similar to mocks, virtual services can have quite complex request matchers that allow one response to be returned for many different types of requests. Sometimes, virtual services simulate system behaviours by constructing parts of the response based on request attributes and data.

It is often difficult to say definitively which of the above categories a test double fits into. They should be treated as a spectrum rather than strict definitions.
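The difference between a stub and a mock described above can be sketched in plain Java without any mocking library. The `TemperatureService` interface and both test doubles are hypothetical names invented for this illustration: the stub only returns hardcoded data, while the mock is programmed by the test and records calls so that interactions can be verified afterwards.

```java
import java.util.ArrayList;
import java.util.List;

public class TestDoublesSketch {

    /** Hypothetical dependency of the code under test. */
    interface TemperatureService {
        double fahrenheitToCelsius(double fahrenheit);
    }

    /** Stub: hardcoded answer, no record of how it was used. */
    static class TemperatureStub implements TemperatureService {
        @Override
        public double fahrenheitToCelsius(double fahrenheit) {
            return 37.78;
        }
    }

    /** Mock: answer is set up by the test, and every call is recorded for verification. */
    static class TemperatureMock implements TemperatureService {
        private final List<Double> calls = new ArrayList<>();
        private double answer;

        void willReturn(double answer) {
            this.answer = answer;
        }

        @Override
        public double fahrenheitToCelsius(double fahrenheit) {
            calls.add(fahrenheit);
            return answer;
        }

        int callCount() {
            return calls.size();
        }

        boolean wasCalledWith(double fahrenheit) {
            return calls.contains(fahrenheit);
        }
    }

    /** Code under test: formats a conversion using whatever service it is given. */
    static String describe(TemperatureService service, double fahrenheit) {
        return fahrenheit + "F = " + service.fahrenheitToCelsius(fahrenheit) + "C";
    }

    public static void main(String[] args) {
        // With a stub, the test can only check the returned state.
        System.out.println(describe(new TemperatureStub(), 100.0));

        // With a mock, the test can also verify the interaction itself.
        TemperatureMock mock = new TemperatureMock();
        mock.willReturn(0.0);
        System.out.println(describe(mock, 32.0));
        System.out.println("calls: " + mock.callCount() + ", called with 32.0: " + mock.wasCalledWith(32.0));
    }
}
```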

Plug in service virtualization

Classic application structure

This is a quite common application structure, where we have any of the following in Application Under Test (AUT):

- UI / GUI
- WebAPI
- 3rd party service

Classic application structure with virtualization

This classic application is quite fragile for development and/or test process. Especially so, if the component (WebAPI) connected to the Application Under Test is:

- Not yet completed
- Still evolving
- Controlled by a third-party or partner
- Available for testing only in limited capacity or at inconvenient times
- Difficult to provision or configure in a test environment
- Needed for simultaneous access by different teams with varied test data setup and other requirements
- Restricted or costly to use for load and performance testing

You can find the full list of such "classic application structure" limitations here What-is-service-virtualization.

*Service virtualization is the key solution to address such a list of impediments.*

For simplicity, assume the AUT connects to other components over TCP/IP. The AUT therefore holds an IP address and port number for each component it talks to. To plug in a virtualization server, the author of the AUT switches that IP and port to a "proxy server" instead of the real endpoint component (WebAPI). The "proxy server" then maps requests coming from the AUT either to virtual assets or to the real endpoint component (WebAPI). How does the mapping work in such a "proxy server"? Have a look here How-to-make-virtual-asset

Therefore, the AUT is built with either:

- a switchable property file acquired on startup, or
- an "on the fly" operation to change the IP and ports of connected components.

Classic APP structure with full scope - Binding in service virtualization
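The switchable property file mentioned above could look like the following minimal sketch. The property keys (`webapi.virtualized`, `webapi.real.*`, `webapi.proxy.*`), the host names and the class name are assumptions made for illustration; a real AUT would define its own keys and load the file from disk on startup.

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

public class EndpointConfigSketch {

    /** Loads properties from text; a real application would read a file instead. */
    static Properties load(String text) {
        Properties properties = new Properties();
        try {
            properties.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return properties;
    }

    /** Picks the proxy endpoint when virtualization is switched on, the real one otherwise. */
    static String resolveEndpoint(Properties properties) {
        boolean virtualized = Boolean.parseBoolean(properties.getProperty("webapi.virtualized", "false"));
        String prefix = virtualized ? "webapi.proxy" : "webapi.real";
        return "http://" + properties.getProperty(prefix + ".host")
                + ":" + properties.getProperty(prefix + ".port");
    }

    public static void main(String[] args) {
        String config = "webapi.virtualized=true\n"
                + "webapi.real.host=api.example.com\n"
                + "webapi.real.port=80\n"
                + "webapi.proxy.host=localhost\n"
                + "webapi.proxy.port=8080\n";
        System.out.println(resolveEndpoint(load(config))); // prints "http://localhost:8080"
    }
}
```

Flipping the single `webapi.virtualized` flag redirects the AUT between the real component and the proxy server without touching code.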

How to make a virtual asset

This can be done in four ways:

- Record all traffic (Mappings and Responses) that comes through proxy - by UI
- Record all traffic (Mappings and Responses) that comes through proxy - by Code
- Create Mappings and Responses manually by text files
- Create Mappings and Responses manually by code

Record all traffic (Mappings and Responses) that comes through proxy - UI

Full article here Wiremock record-playback.

First, start an instance of WireMock running standalone. Once that’s running, visit the recorder UI page at http://localhost:8080/__admin/recorder (assuming you started WireMock on the default port of 8080).

Enter the URL you wish to record from in the target URL field and click the Record button. You can use http://example.mocklab.io to try it out.

Now you need to make a request through WireMock to the target API so that it can be recorded. If you’re using the example URL, you can generate a request using curl:

$ curl http://localhost:8080/recordables/123

Now click stop. You should see a message indicating that one stub was captured.

You should also see that a file has been created called something like recordables_123-40a93c4a-d378-4e07-8321-6158d5dbcb29.json under the mappings directory created when WireMock started up, and that a new mapping has appeared at http://localhost:8080/__admin/mappings.

Requesting the same URL again (possibly disabling your wifi first if you want a firm proof) will now serve the recorded result:

$ curl http://localhost:8080/recordables/123

{

"message": "Congratulations on your first recording!"

}

Record all traffic (Mappings and Responses) that comes through proxy - by Code

An example of how such a record can be achieved

@Test
public void startRecording() {
    SnapshotRecordResult recordedMappings;

    // Start the virtual server and record traffic proxied to the target URL
    DriverManager.getDriverVirtualService()
            .start();
    DriverManager.getDriverVirtualService()
            .startRecording("http://example.mocklab.io");

    // Stop recording and keep the captured mappings
    recordedMappings = DriverManager.getDriverVirtualService()
            .stopRecording();

    BFLogger.logDebug("Recorded messages: " + recordedMappings.toString());
}

Create Mappings and Responses manually by text files

EMPTY

Create Mappings and Responses manually by code

Link to full file structure: REST_FarenheitToCelsiusMethod_Test.java

public void startVirtualServer() {
    // Start Virtual Server
    WireMockServer driverVirtualService = DriverManager.getDriverVirtualService();

    // Get the http and https ports the Virtual Server is running on
    int httpPort = driverVirtualService.port();
    int httpsPort = driverVirtualService.httpsPort();

    // Log whether the Virtual Server is running
    BFLogger.logDebug("Is Virtual server running: " + driverVirtualService.isRunning());

    String baseURI = "http://localhost";
    endpointBaseUri = baseURI + ":" + httpPort;
}

REST_FarenheitToCelsiusMethod_Test.java

public void activateVirtualAsset() {
    /*
     * ----------
     * Mock response. Map request with virtual asset from file
     * -----------
     */
    BFLogger.logInfo("#1 Create Stub content message");
    BFLogger.logInfo("#2 Add resource to virtual server");

    String restResourceUrl = "/some/thing";
    String restResponseBody = "{ \"FahrenheitToCelsiusResponse\":{\"FahrenheitToCelsiusResult\":37.7777777777778}}";

    new StubREST_Builder                    // For active virtual server ...
            .StubBuilder(restResourceUrl)   // Activate mapping, for this Url AND
            .setResponse(restResponseBody)  // Send this response AND
            .setStatusCode(200)             // With status code 200 FINALLY
            .build();                       // Set and save mapping.
}

Link to full file structure: StubREST_Builder.java

Source link to How to create Stub.

StubREST_Builder.java

public class StubREST_Builder {

    // required parameters
    private String endpointURI;

    // optional parameters
    private int statusCode;

    public String getEndpointURI() {
        return endpointURI;
    }

    public int getStatusCode() {
        return statusCode;
    }

    private StubREST_Builder(StubBuilder builder) {
        this.endpointURI = builder.endpointURI;
        this.statusCode = builder.statusCode;
    }

    // Builder Class
    public static class StubBuilder {

        // required parameters
        private String endpointURI;

        // optional parameters
        private int statusCode = 200;
        private String response = "{ \"message\": \"Hello\" }";

        public StubBuilder(String endpointURI) {
            this.endpointURI = endpointURI;
        }

        public StubBuilder setStatusCode(int statusCode) {
            this.statusCode = statusCode;
            return this;
        }

        public StubBuilder setResponse(String response) {
            this.response = response;
            return this;
        }

        public StubREST_Builder build() {
            // GET
            DriverManager.getDriverVirtualService()
                    .givenThat(
                            // Given that request with ...
                            get(urlMatching(this.endpointURI))
                                    .withHeader("Content-Type", equalTo(ContentType.JSON.toString()))
                                    // Return given response ...
                                    .willReturn(aResponse()
                                            .withStatus(this.statusCode)
                                            .withHeader("Content-Type", ContentType.JSON.toString())
                                            .withBody(this.response)
                                            .withTransformers("body-transformer")));

            // POST
            DriverManager.getDriverVirtualService()
                    .givenThat(
                            // Given that request with ...
                            post(urlMatching(this.endpointURI))
                                    .withHeader("Content-Type", equalTo(ContentType.JSON.toString()))
                                    // Return given response ...
                                    .willReturn(aResponse()
                                            .withStatus(this.statusCode)
                                            .withHeader("Content-Type", ContentType.JSON.toString())
                                            .withBody(this.response)
                                            .withTransformers("body-transformer")));

            // PUT
            DriverManager.getDriverVirtualService()
                    .givenThat(
                            // Given that request with ...
                            put(urlMatching(this.endpointURI))
                                    .withHeader("Content-Type", equalTo(ContentType.JSON.toString()))
                                    // Return given response ...
                                    .willReturn(aResponse()
                                            .withStatus(this.statusCode)
                                            .withHeader("Content-Type", ContentType.JSON.toString())
                                            .withBody(this.response)
                                            .withTransformers("body-transformer")));

            // DELETE
            DriverManager.getDriverVirtualService()
                    .givenThat(
                            // Given that request with ...
                            delete(urlMatching(this.endpointURI))
                                    .withHeader("Content-Type", equalTo(ContentType.JSON.toString()))
                                    // Return given response ...
                                    .willReturn(aResponse()
                                            .withStatus(this.statusCode)
                                            .withHeader("Content-Type", ContentType.JSON.toString())
                                            .withBody(this.response)
                                            .withTransformers("body-transformer")));

            // CATCH any other requests
            DriverManager.getDriverVirtualService()
                    .givenThat(
                            any(anyUrl())
                                    .atPriority(10)
                                    .willReturn(aResponse()
                                            .withStatus(404)
                                            .withHeader("Content-Type", ContentType.JSON.toString())
                                            .withBody("{\"status\":\"Error\",\"message\":\"Endpoint not found\"}")
                                            .withTransformers("body-transformer")));

            return new StubREST_Builder(this);
        }
    }
}

Start a virtual server

The following picture presents the process of executing Smoke Tests in a virtualized environment:

Install docker service

If docker is not already installed on the machine (this should be checked during C2C creation), install docker, docker-compose, apache2-utils and openssl. You can use the script to install docker and docker-compose, or refer to this post and add an alias for this machine <C2C_Alias_Name>:

- run the script
- sudo apt-get install -y apache2-utils

Build a docker image

Dockerfile:

FROM docker.xxx.com/ubuntu:16.04
MAINTAINER Maintainer Name "maintainer@email.address"
LABEL name=ubuntu_java \
      version=v1-8.0 \
      base="ubuntu:16.04" \
      build_date="03-22-2018" \
      java="1.8.0_162" \
      wiremock="2.14.0" \
      description="Docker to use with Ubuntu, JAVA and WIREMOCK "

# Update and install the applications needed
COPY 80proxy /etc/apt/apt.conf.d/80proxy
RUN apt-get update
RUN apt-get install -y \
    wget \
    libfontconfig \
    unzip \
    zip \
    ksh \
    curl \
    git
COPY wgetrc /etc/wgetrc

# Env parameters
### JAVA PART ###
# TO UPDATE: please verify url link to JDK http://www.oracle.com/technetwork/java/javase/downloads/jdk8-downloads-2133151.html
## Download and install JAVA JDK8
RUN mkdir /opt/jdk
RUN wget -qq --header "Cookie: oraclelicense=accept-securebackup-cookie" http://download.oracle.com/otn-pub/java/jdk/8u162-b12/0da788060d494f509bf8624735fa2f1/jdk-8u162-linux-x64.tar.gz && tar -zxf jdk-8u162-linux-x64.tar.gz -C /opt/jdk && rm jdk-8u162-linux-x64.tar.gz && update-alternatives --install /usr/bin/java java /opt/jdk/jdk1.8.0_162/bin/java 100 && update-alternatives --install /usr/bin/javac javac /opt/jdk/jdk1.8.0_162/bin/javac 100 && java -version && chmod 755 -R /opt/jdk/jdk1.8.0_162/
RUN java -version

## Add user
RUN useradd -u 29001 -g 100 srvpwiredev

## Add app
RUN mkdir -p -m 777 /app
COPY wiremock-standalone-2.14.0.jar /app/wiremock-standalone-2.14.0.jar

## Expose port
EXPOSE 8080

## Set workdir
WORKDIR /app

## Run app
CMD java -jar /app/wiremock-standalone-2.14.0.jar

Execute the following steps with a specified version to build a docker image and push it to the repository :

## Build image

sudo docker build -t docker.xxx.com/app/build/wiremock:v2.14.0 .

## Push image

sudo docker login docker.xxx.com

sudo docker push docker.xxx.com/app/build/wiremock:v2.14.0

Run docker image

To run a docker image, execute the following command:

sudo docker run -td -p 8080:8080 -v /home/wiremock/repo/app/docker/QA/mappings:/app/mappings -v /home/wiremock/repo/app/docker/QA/__files:/app/__files --restart always docker.xxx.com/app/build/wiremock:v2.14.0

Where:

-p - publish a container’s port to the host

-v - bind mount a volume. WireMock server creates two directories under the current one: mappings and __files. It is necessary to mount directories with already created mappings and responses to make it work.

--restart always - restart policy to apply when a container exits

All of the parameters are described in: official docker documentation

Map requests with virtual assets

What is WireMock?

WireMock is an HTTP mock server. At its core it is a web server that can be primed to serve canned responses to particular requests (stubbing) and that captures incoming requests so that they can be checked later (verification). It also has an assortment of other useful features, including record/playback of interactions with other APIs, injection of faults and delays, and simulation of stateful behaviour.

Full documentation can be found under the following link: WireMock

Record / create virtual assets mappings

Record

WireMock can create stub mappings from requests it has received. Combined with its proxying feature, this allows you to "record" stub mappings from interaction with existing APIs.

Record and playback (Legacy): documentation

java -jar wiremock-standalone-2.16.0.jar --proxy-all="http://search.twitter.com" --record-mappings --verbose

Once it is started and a request is sent to it, the request is forwarded to "http://search.twitter.com" and the traffic (response) is saved to files in the mappings and __files directories for further use.

Record and playback (New): documentation

Enable mappings in a virtual server

When the WireMock server starts, it creates two directories under the current one: mappings and __files. To create a stub, it is necessary to drop a file with a .json extension under mappings.
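As an illustration of dropping a stub file under mappings, the following shell sketch writes one mapping together with the response body it refers to. The wiremock-root directory, the /api/hello URL and the hello.json file names are assumptions made for this example and are not part of the project. The JSON keys used (request.method, request.urlPath, response.status, response.headers, response.bodyFileName) are standard WireMock stub mapping fields.

```shell
#!/bin/sh
set -eu

# Hypothetical root directory. In the Docker setup it corresponds to the host
# directories that are mounted as /app/mappings and /app/__files.
WIREMOCK_ROOT="${WIREMOCK_ROOT:-./wiremock-root}"
mkdir -p "$WIREMOCK_ROOT/mappings" "$WIREMOCK_ROOT/__files"

# Canned response body, referenced from the mapping through bodyFileName.
cat > "$WIREMOCK_ROOT/__files/hello.json" <<'EOF'
{ "message": "hello from the virtual asset" }
EOF

# Stub mapping: answer GET /api/hello (example URL) with the file above.
cat > "$WIREMOCK_ROOT/mappings/hello.json" <<'EOF'
{
  "request": {
    "method": "GET",
    "urlPath": "/api/hello"
  },
  "response": {
    "status": 200,
    "headers": { "Content-Type": "application/json" },
    "bodyFileName": "hello.json"
  }
}
EOF

# List the mapping files that WireMock would load.
ls "$WIREMOCK_ROOT/mappings"
```

WireMock reads the mappings directory when it starts, so in a setup like the one described here the container would typically be restarted after new files are added.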

Run docker with mounted volumes

Mappings are in a repository. It is necessary to mount directories with already created mappings and responses to make it work:

sudo docker run -td -p 8080:8080 -v /home/wiremock/repo/app/docker/QA/mappings:/app/mappings -v /home/wiremock/repo/app/docker/QA/__files:/app/__files --restart always docker.xxx.com/app/build/wiremock:v2.14.0

The description of how to build and run docker is available under: Docker run command description

Recorded mappings

Recorded mappings are kept in the project repository.

Create a user and map them to docker user

To enable the connection from Jenkins to the Virtual Server (C2C), it is necessary to create a user and add them to the docker group. This can be done using the following command:

adduser -G docker -m wiremock

To set the password for a wiremock user:

passwd wiremock

Create SSH private and public keys for a wiremock user

SSH keys serve as a means of identifying yourself to an SSH server using public-key cryptography and challenge-response authentication. One immediate advantage this method has over traditional password authentication is that you can be authenticated by the server without ever having to send your password over the network.

To create an SSH key, log in as wiremock (previously created user).

su wiremock

The .ssh directory is not created by default in the user's home directory. Therefore, it is necessary to create it:

mkdir ~/.ssh

Now we can proceed with creating an RSA key using ssh-keygen (a tool for creating new authentication key pairs for SSH):

ssh-keygen -t rsa

A key should be created under ~/.ssh/id_rsa. Append the public key to authorized_keys:

wiremock@vc2crptXXXXXXXn:~/.ssh$ cat id_rsa.pub >> authorized_keys
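The key creation steps above can also be run non-interactively. The following is a minimal sketch under these assumptions: SSH_DIR is a hypothetical variable that defaults to a scratch directory so the script is safe to try out, and the key is created with an empty passphrase (-N ""), which may not be acceptable in every environment. The chmod calls are an addition, since sshd commonly rejects keys when the permissions are too open.

```shell
#!/bin/sh
set -eu

# Assumption: SSH_DIR defaults to a scratch location. For real use, run as the
# wiremock user with SSH_DIR="$HOME/.ssh".
SSH_DIR="${SSH_DIR:-$(mktemp -d)/.ssh}"

# Create the directory and restrict it to the owner.
mkdir -p "$SSH_DIR"
chmod 700 "$SSH_DIR"

# Generate an RSA key pair without prompts (-q quiet, -N "" empty passphrase,
# -C comment, -f output file).
ssh-keygen -q -t rsa -N "" -C "wiremock" -f "$SSH_DIR/id_rsa"

# Authorize the new public key for logins as this user.
cat "$SSH_DIR/id_rsa.pub" >> "$SSH_DIR/authorized_keys"
chmod 600 "$SSH_DIR/authorized_keys"

ls "$SSH_DIR"
```

The private key file (id_rsa) is the one whose content would later be stored in the Jenkins credentials entry.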

Install an SSH key in Jenkins

To add an SSH key to Jenkins, go to credentials in your job location. Choose the folder within credentials, then 'global credentials', 'Add credentials'. Fill in the fields. Finally, the entry should be created.

Build a Jenkins Groovy script

The description of how to use SSH Agent plugin in Jenkins pipeline can be found under: https://www.karthikeyan.tech/2017/09/ssh-agent-blue-ocean-via-jenkins.html

Example of use:

sshagent (credentials: [env.WIREMOCK_CREDENTIALS]) {

sh """

ssh -T -o StrictHostKeyChecking=no -l ${env.WIREMOCK_USERNAME} ${env.WIREMOCK_IP_ADDRESS} "docker container restart ${env.WIREMOCK_CONTAINER_NAME}"

"""

}

Where:

env.WIREMOCK_CREDENTIALS - credential ID of the previously created wiremock credentials. Once it is present, commands can be executed on the remote machine.

env.WIREMOCK_USERNAME - name of the user associated with the configured private key

env.WIREMOCK_IP_ADDRESS - IP address of the machine where the user with this private key exists

Pull repository with virtual assets

To pull the repository on a remote machine, it is necessary to use the previously described SSH Agent plugin. An example of use:

sshagent (credentials: [env.WIREMOCK_CREDENTIALS]) {

withCredentials([usernamePassword(credentialsId: env.STASH_CREDENTIALS, passwordVariable: 'PASS', usernameVariable: 'USER')]) {

sh """

ssh -T -o StrictHostKeyChecking=no -l ${env.WIREMOCK_USERNAME} ${env.WIREMOCK_IP_ADDRESS} "cd ~/${env.APPLICATION_DIRECTORY_WIREMOCK}/${env.PROJECT_HOME}; git fetch https://$USER:$PASS@${env.GIT_WITHOUT_HTTPS} ${env.GIT_BRANCH}; git reset --hard FETCH_HEAD; git clean -df"

"""

}

}

Where:

withCredentials allows various kinds of credentials (secrets) to be used in idiosyncratic ways. Each binding will define an environment variable active within the scope of the step. Then the necessary commands are executed:

cd … - command will change from current directory to the specified directory with git repository

git fetch …; git reset …; git clean … - update the working copy from the Git branch. git pull and git checkout are not used here, to avoid problems caused by encoding differences between Mac OS X, Linux, etc.
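To make the effect of the fetch / reset / clean sequence concrete, here is a small self-contained sketch that reproduces it on throwaway local repositories. The directory names origin and checkout, the file names and the demo commit identity are assumptions made for this illustration only. The real pipeline runs the same three git commands over SSH against the project repository.

```shell
#!/bin/sh
set -eu

# Scratch area so the sketch never touches a real repository.
WORK_DIR=$(mktemp -d)
cd "$WORK_DIR"

# "origin" stands in for the remote repository with virtual assets.
git init -q origin
cd origin
git symbolic-ref HEAD refs/heads/develop
mkdir mappings
echo '{"v": 1}' > mappings/stub.json
git add .
git -c user.name=demo -c user.email=demo@example.com commit -q -m "initial mappings"
cd ..

# "checkout" stands in for the working copy on the WireMock machine.
git clone -q --branch develop origin checkout

# New mappings arrive upstream ...
cd origin
echo '{"v": 2}' > mappings/stub.json
git -c user.name=demo -c user.email=demo@example.com commit -q -am "update mappings"
cd ..

# ... while the working copy has drifted: a local edit and an untracked file.
cd checkout
echo 'local edit' > mappings/stub.json
echo 'leftover' > stray.txt

# The three commands from the pipeline step.
git fetch -q origin develop      # download the branch tip into FETCH_HEAD
git reset -q --hard FETCH_HEAD   # discard local edits, match the fetched commit
git clean -q -df                 # delete untracked files and directories

cat mappings/stub.json
```

After the last three commands, the working copy matches the fetched commit exactly: the local edit is gone and stray.txt has been removed.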

PLEASE remember that when using this script for the first time, the code from previous block should be changed to:

stage("ssh-agent") {

sshagent (credentials: [env.WIREMOCK_CREDENTIALS]) {

withCredentials([usernamePassword(credentialsId: env.STASH_CREDENTIALS, passwordVariable: 'PASS', usernameVariable: 'USER')]) {

sh """

ssh -T -o StrictHostKeyChecking=no -l ${env.WIREMOCK_USERNAME} ${env.WIREMOCK_IP_ADDRESS} "cd ~/${env.APPLICATION_DIRECTORY_WIREMOCK}; git clone --depth=1 --branch=develop https://$USER:$PASS@${env.GIT_WITHOUT_HTTPS}"

"""

}

}

}

Install an application with Smoke environment

Update properties settings file

New settings file is pushed to the repository. Example configuration:

...

<key>autocomplete</key> <string>http://server:port</string>

<key>benefitsummary</key> <string>http://server:port</string>

<key>checkscan</key> <string>http://server:port</string>

<key>dpesb</key> <string>http://server:port</string>

...

To change the default route, the address of each (backend) service should be changed to the WireMock address, as shown in the listing.

Build an application with updated properties file

New versions of application are prepared by Jenkins job.

Install an application on target properties file

Installation of the application is currently performed manually, using the SeeTest environment.

UI tests

Run Jenkins job

Jenkinsfile:

// Jenkins parameters are overriding the properties below

def properties = [

JENKINS_LABELS : 'PWI_LINUX_DEV',

APPLICATION_FOLDER : 'app_dir',

PROJECT_HOME : 'app_home_folder',

//WIREMOCK

WIREMOCK_CREDENTIALS : 'vc2crptXXXXXXn',

WIREMOCK_USERNAME : 'wiremock',

WIREMOCK_ADDRESS : 'http://vc2crptXXXXXXn.xxx.com:8080',

WIREMOCK_IP_ADDRESS : '10.196.67.XXX',

WIREMOCK_CONTAINER_NAME : 'wiremock',

APPLICATION_DIRECTORY_WIREMOCK : 'repo',

//GIT

GIT_CREDENTIALS : 'e47742cc-bb66-4321-2341-a2342er24f2',

GIT_BRANCH : 'develop',

GIT_SSH : 'ssh://git@stash.xxx.com/app/app.git',

GIT_HTTPS : 'HTTPS://git@stash.xxx.com/app/app.git',

STASH_CREDENTIALS : 'e47742cc-bb66-4321-2341-a2342er24f2',

//DOCKER

ARTIFACTORY_USER_CREDENTIALS : 'e47742cc-bb66-4321-2341-a2342er24f2',

SEETEST_DOCKER_IMAGE : 'docker.xxx.com/project/images/app:v1-8.3',

//SEETEST_DOCKER_IMAGE

SEETEST_APPLICATION_FOLDER : 'seetest_dir',

SEETEST_PROJECT_HOME : 'Automated Scripts',

SEETEST_GIT_SSH : 'ssh://git@stash.xxx.com/pr/seetest_automation_cucumber.git',

SEETEST_GIT_BRANCH : 'develop',

SEETEST_GRID_USER_CREDENTIALS : 'e47742cc-bb66-4321-2341-a2342er24f2',

SEETEST_CUCUMBER_TAG : '@Virtualization',

SEETEST_CLOUD_NAME : 'Core Group',

SEETEST_IOS_VERSION : '11',

SEETEST_IOS_APP_URL : '',

SEETEST_INSTALL_APP : 'No',

SEETEST_APP_ENVIRONMENT : 'SmokeTests',

SEETEST_DEVICE_QUERY : '',

]

node(properties.JENKINS_LABELS) {

try {

prepareEnv(properties)

gitCheckout()

stageStartVirtualServer()

stageMapApiRequests()

stageInstallApplication()

stageUITests()

} catch(Exception ex) {

currentBuild.result = 'FAILURE'

error "Error: ${ex}"

}

}

//====================================END OF PIPELINE==========================================

private void prepareEnv(properties) {

cleanWorkspace()

overrideProperties(properties)

setWorkspace()

}

private void gitCheckout() {

dir(env.APPLICATION_FOLDER) {

checkout([$class: 'GitSCM', branches: [[name: env.GIT_BRANCH]], doGenerateSubmoduleConfigurations: false, extensions: [[$class: 'CloneOption', depth: 0, noTags: false, reference: '', shallow: false, timeout: 50]], gitTool: 'Default', submoduleCfg: [], userRemoteConfigs: [[credentialsId: env.GIT_CREDENTIALS, url: env.GIT_SSH]]])

}

}

private void stageStartVirtualServer() {

def module = load "${env.SUBMODULES_DIR}/stageStartVirtualServer.groovy"

module()

}

private void stageMapApiRequests() {

def module = load "${env.SUBMODULES_DIR}/stageMapApiRequests.groovy"

module()

}

private void stageInstallApplication() {

def module = load "${env.SUBMODULES_DIR}/stageInstallApplication.groovy"

module()

}

private void stageUITests() {

def module = load "${env.SUBMODULES_DIR}/stageUITests.groovy"

module()

}

private void setWorkspace() {

String workspace = pwd()

env.APPLICATION_DIRECTORY = "/${env.APPLICATION_DIRECTORY}"

env.WORKSPACE_LOCAL = workspace + env.APPLICATION_DIRECTORY

env.SEETEST_PROJECT_HOME_ABSOLUTE_PATH = "${workspace}/${env.SEETEST_APPLICATION_FOLDER}/${env.SEETEST_PROJECT_HOME}"

env.SUBMODULES_DIR = env.WORKSPACE_LOCAL + "/pipelines/SmokeTests.submodules"

env.COMMONS_DIR = env.WORKSPACE_LOCAL + "/pipelines/commons"

}

/*

Function overrides env values based on provided properties

*/

private void overrideProperties(properties) {

for (param in properties) {

if (env.(param.key) == null) {

echo "Adding parameter '${param.key}' with default value: '${param.value}'"

env.(param.key) = param.value

} else {

echo "Parameter '${param.key}' has overridden value: '${env.(param.key)}'"

}

}

echo sh(script: "env | sort", returnStdout: true)

}

private void cleanWorkspace() {

sh 'rm -rf *'

}

stageStartVirtualServer.groovy:

def call () {

stage("Check virtual server") {

def statusCode

try {

def response = httpRequest "${env.WIREMOCK_ADDRESS}/__admin/"

statusCode = response.status

} catch(Exception ex) {

currentBuild.result = 'FAILURE'

error 'WireMock server is unreachable.'

}

if (statusCode != 200) {

currentBuild.result = 'FAILURE'

error "WireMock server is unreachable. Return code: ${statusCode}"

}

}

}

stageMapApiRequests.groovy:

def call() {

stage("Map API requests with virtual assets") {

checkoutRepository()

restartWiremock()

checkWiremockStatus()

}

}